MCP with Postgres - Querying my data in plain English

My work profile doesn’t involve being super specialized with database queries. While I need to frequently formulate queries (within code and outside) in one form or the other, I often find myself looking up query syntax and database server specific aspects. So, with the advent of Gen AI, I have been super curious how can I make my life easier writing these queries.

One way to do this is through specialized Text to SQL AI tools like text2sql. But, given that I already pay for ChatGPT and Cursor, I wanted to make my developer IDE do this work for me. In my opinion, folks dealing exhaustively with databases would benefit from specialized Text to SQL AI tools. But, developers like myself may prefer integrating their development IDE to fire these queries for cost and familiarity considerations.

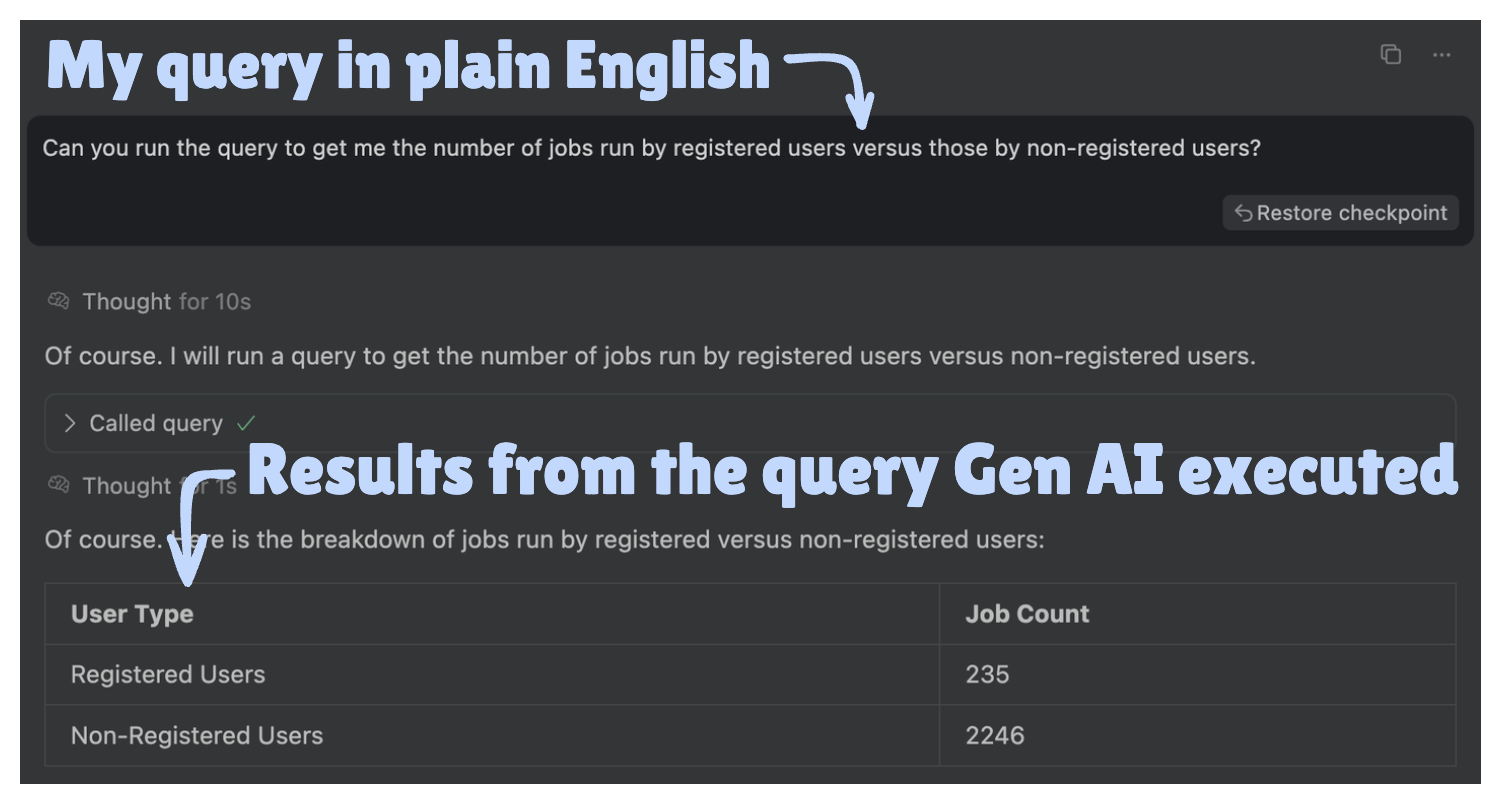

With this background, I wanted to setup Cursor in a way that I could dictate my queries in plain English for it to execute and show me the results.

Here’s a screencast video recording that covers it all:

Or, if you prefer to read the text instead, the following sections cover the same information. The code snippets mentioned in the video recording are also available in the sections below.

1. Setup to make Gen AI write and run queries

There are essentially three things we need to set up so that a Gen AI-based IDE can write and run Postgres queries based on our instructions in human language.

Note: While this setup talks about the Postgres database and Cursor IDE, the same steps would work for any relational database, including MySQL, and any Gen AI enabled development environment that supports MCP integration.

1.1. MCP setup

The MCP server is what connects the Gen AI IDE with the Postgres database so that Gen AI can directly run the queries. This is the PostgreSQL MCP server I used in my current setup. It enables read-only access to the underlying database. Along with the MCP server, we need to provide the database connection string. Below is how my ~/cursor/mcp.json looks like (also accessible via Cmd+Shift+P / Ctrl+Shift+P -> View: Open MCP Settings -> Click New MCP Server):

{

"mcpServers": {

"mcp_demo_db_mcp_server": {

"command": "npx",

"args": [

"-y",

"@modelcontextprotocol/server-postgres",

"postgresql://<user>:<password>@<host>:<port>/<database>"

]

}

}

}

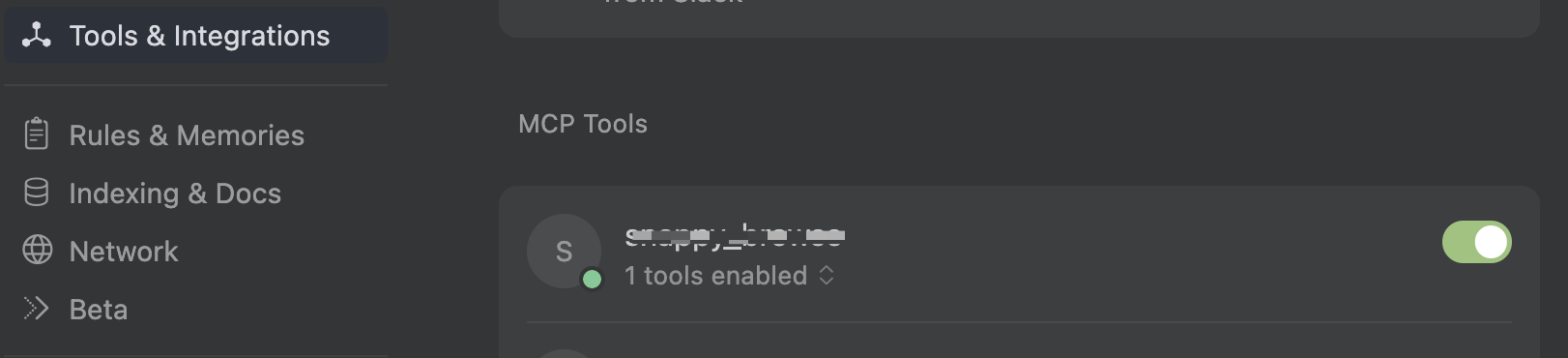

Once my MCP server is adequately set up (a Cursor restart may be required after setting up mcp.json), it should appear like the following on Cursor settings:

I realize that @modelcontextprotocol/server-postgres that I’m using has been deprecated (as of July, 2025). But, there’s an absolute chaos when it comes to reliable MCP servers out there. Looking for an MCP server of any kind yields dozens, but its difficult to check their reliability. I’m waiting for MCP Toolbox for Databases to stabilize for me to use it instead of the current MCP Postgres server.

1.1.1. Be cautious of malicious MCP servers

There are a lot of malicious MCP servers out there. And in this case, we are providing database credentials to the MCP server. As a result, we need to be careful about the credibility of the MCP server we use. Also, I tend to avoid connecting via MCP a production database under any circumstance because we are directly executing queries generated by Gen AI. If you find using a MCP server very risky, you can skip setting up MCP. In that case, you can use Gen AI to generate the queries which you can copy and run as you deem fit.

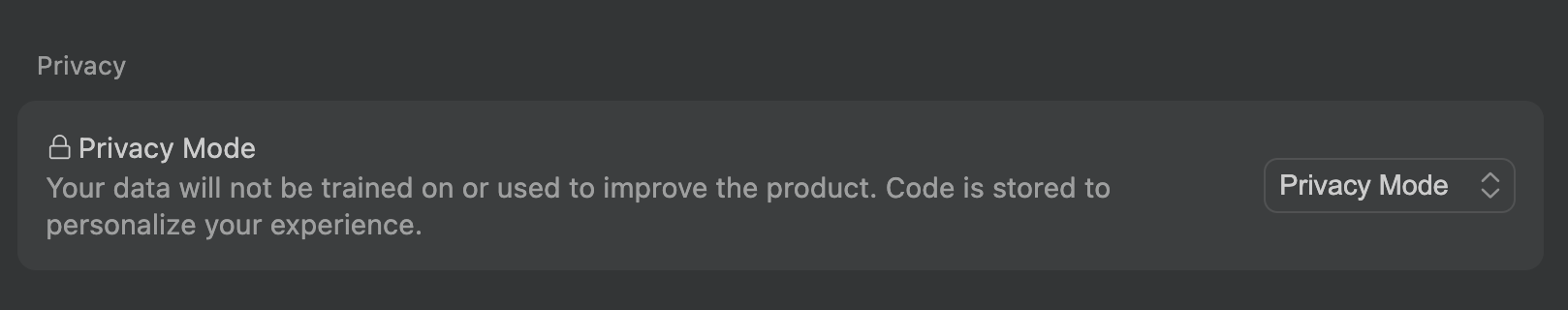

1.1.2. Ensure right privacy settings to avoid leaking data to LLMs

We don’t want LLMs to be trained based on our data. More so if the data is sensitive in nature. To ensure we don’t end up leaking our data to LLMs, it is important to check our IDE privacy policy and settings. With Cursor, Privacy Mode needs to be enabled via Cursor Settings as detailed below:

For other Gen AI IDEs, it is ideal to check privacy policy and settings in prior.

1.2. Explaining database schema to Gen AI

For Gen AI to be able to generate queries for us, we need to feed it our database schema. The following is the shell script (co-authored with Gen AI) I use to extract the database schema from an existing database:

#!/bin/bash

# Customize these:

DB_NAME="<databaesname>"

DB_USER="<databaseuser>"

# Output file

OUTFILE="<basepath>/db-schema.sql"

echo "-- === Tables and Columns ===" > "$OUTFILE"

psql -U "$DB_USER" -d "$DB_NAME" -Atc "

SELECT

'-- Table: ' || table_name || E'\n-- Columns: ' ||

string_agg(column_name || ' (' || data_type || ')', ', ')

FROM information_schema.columns

WHERE table_schema = 'public'

GROUP BY table_name

ORDER BY table_name;" >> "$OUTFILE"

echo -e "\n-- === Foreign Keys ===" >> "$OUTFILE"

psql -U "$DB_USER" -d "$DB_NAME" -Atc "

SELECT

'-- ' || tc.table_name || '.' || kcu.column_name || ' → ' || ccu.table_name || '.' || ccu.column_name

FROM

information_schema.table_constraints AS tc

JOIN information_schema.key_column_usage AS kcu

ON tc.constraint_name = kcu.constraint_name AND tc.table_schema = kcu.table_schema

JOIN information_schema.constraint_column_usage AS ccu

ON ccu.constraint_name = tc.constraint_name AND ccu.table_schema = tc.table_schema

WHERE tc.constraint_type = 'FOREIGN KEY' AND tc.table_schema = 'public'

ORDER BY tc.table_name;" >> "$OUTFILE"

echo -e "\n-- === Indexes ===" >> "$OUTFILE"

psql -U "$DB_USER" -d "$DB_NAME" -Atc "

SELECT

'-- ' || t.relname || ': ' || i.relname ||

CASE ix.indisunique WHEN true THEN ' (UNIQUE)' ELSE '' END ||

' on columns: ' || string_agg(a.attname, ', ')

FROM pg_class t

JOIN pg_index ix ON t.oid = ix.indrelid

JOIN pg_class i ON i.oid = ix.indexrelid

JOIN pg_attribute a ON a.attrelid = t.oid AND a.attnum = ANY(ix.indkey)

JOIN pg_namespace ns ON ns.oid = t.relnamespace

WHERE ns.nspname = 'public'

GROUP BY t.relname, i.relname, ix.indisunique

ORDER BY t.relname, i.relname;" >> "$OUTFILE"

echo -e "\n-- === JSONB Field Structures (sample-based) ===" >> "$OUTFILE"

# Find all jsonb fields and loop through them.

# This uses a `while read` loop which is more portable than `mapfile` (which fails on default macOS bash).

psql -U "$DB_USER" -d "$DB_NAME" -Atc "

SELECT table_name, column_name

FROM information_schema.columns

WHERE data_type = 'jsonb' AND table_schema = 'public'

ORDER BY table_name, column_name;" |

while IFS='|' read -r table column; do

# Skip any empty lines that might result from the pipe

if [ -z "$table" ]; then continue; fi

echo -e "\n-- $table.$column (jsonb) sample structure:" >> "$OUTFILE"

# This advanced query explores the JSONB structure down to level 2,

# and outputs a special format that the awk script below can parse for pretty-printing.

psql -U "$DB_USER" -d "$DB_NAME" -Atc "

WITH source_rows AS MATERIALIZED (

SELECT \"$column\"

FROM \"$table\"

WHERE \"$column\" IS NOT NULL

AND jsonb_typeof(\"$column\") IN ('object', 'array')

LIMIT 500 -- Process up to 500 rows to find a good sample

),

unnested_items AS (

-- Handle rows where the column is a single JSON object

SELECT \"$column\" AS item FROM source_rows WHERE jsonb_typeof(\"$column\") = 'object'

UNION ALL

-- Handle rows where the column is a JSON array, and unnest it

SELECT jsonb_array_elements(\"$column\") AS item FROM source_rows WHERE jsonb_typeof(\"$column\") = 'array'

),

level_1_pairs AS (

-- Get all level 1 key-value pairs by using jsonb_each() on each item.

SELECT key, value

FROM unnested_items, jsonb_each(item)

WHERE item IS NOT NULL AND jsonb_typeof(item) = 'object'

)

-- Start final output generation

SELECT DISTINCT path || '|' || is_expandable::text FROM (

-- Level 1 Keys

SELECT

key AS path,

(jsonb_typeof(value) IN ('object', 'array')) AS is_expandable

FROM level_1_pairs

UNION ALL

-- Level 2 Keys from nested objects

SELECT

p1.key || '.' || p2 AS path,

false AS is_expandable -- Stop at level 2

FROM level_1_pairs p1, jsonb_object_keys(p1.value) p2

WHERE jsonb_typeof(p1.value) = 'object'

UNION ALL

-- Level 2 keys from nested arrays of objects

SELECT

p1.key || '.' || p2 AS path,

false AS is_expandable

FROM

level_1_pairs p1,

jsonb_array_elements(p1.value) AS arr_item,

jsonb_object_keys(arr_item) AS p2

WHERE jsonb_typeof(p1.value) = 'array' AND jsonb_typeof(arr_item) = 'object'

) AS final_paths

ORDER BY 1;

" | awk '

BEGIN { FS = "|"; }

{

split($1, parts, ".");

parent = parts[1];

is_parent_line = (length(parts) == 1);

is_expandable = ($2 == "t");

if (is_parent_line) {

if (!processed_parents[parent]) {

if (is_expandable) {

print "-- - " parent ":";

processed_parents[parent] = 1;

} else {

print "-- - " parent;

}

}

} else { # This is a child line

child = parts[2];

# Ensure parent is printed with a colon

if (!processed_parents[parent]) {

print "-- - " parent ":";

processed_parents[parent] = 1;

}

print "-- - " child;

}

}

' >> "$OUTFILE"

done

echo "✅ Schema with JSONB structure saved to: $OUTFILE"

The shell script above generates a file like the following containing tables, columns, foreign keys, indexes, and JSON structures for JSONB fields.

-- === Tables and Columns ===

-- Table: tasks

-- Columns: input_info (jsonb), expired_at (timestamp with time zone), status (jsonb), user_id (character varying), task_name (character varying), transfer_in_progress (boolean), id (integer)

-- Table: users

-- Columns: display_name (character varying), user_id (character varying), email (character varying), id (integer)

-- === Foreign Keys ===

-- tasks.user_id → users.user_id

-- === Indexes ===

-- tasks: idx_tasks_user_id on columns: user_id

-- users: users_user_id_key (UNIQUE) on columns: user_id

-- === JSONB Field Structures (sample-based) ===

-- tasks.input_info (jsonb) sample structure:

-- - dType

-- - href

-- tasks.status (jsonb) sample structure:

-- - error

-- - internalError

-- - internalErrorAdditionalInfo

-- - userError

-- - queueJobId

-- - queueJobInfo

-- - output:

-- - additionalInfoType

-- - additionalInfo

-- - clusterResult

-- - firstloadTimeTaken

-- - enabledAdditionalInfoType

-- - enabledAdditionalInfo

-- - enabledConfigs

-- - enabledHref

-- - href

-- - state

The above file will be fed to Gen AI model for it to do the plain English to SQL transformation.

1.3. Explaining database design via example queries to Gen AI

Along with the database schema, we need to provide a database design so the Gen AI can understand the various fields (their meaning, possible values, etc.). However, in the various places I have worked, I have often found the database design document to be out-of-date or incomplete. In the absence of such a document, we can create a file containing example queries with a brief description like the following:

-- Query to get URLs for all the failed tasks

SELECT id, created_at, input_info->>'href' AS url FROM tasks

WHERE status ? 'error' order by id desc;

-- Query to fetch URLs for all tasks run by a certain user

SELECT id, created_at, input_info->>'href' AS url FROM tasks

where user_id = (select id from users where email = '<email-address>')

While the above is a super-small sample, the more example queries we can provide, the better the Gen AI’s text-to-SQL transformation will be.

In my experience, an example query document is an very decent input for Gen AI model in absence of a decent database design document. Also, this example query document can be incrementally improved to help improve Gen AI’s capabilities.

2. Querying the data in plain English

With the above three items set up adequately, I am able to query databases consisting of about a hundred tables. So, an instruction like the following in Cursor readily results in the data I want to retrieve.

@db-schema.sql @example-queries.sql Run the query

to fetch me day wise the percentages of tasks

that failed for last two weeks.

We can also ask Gen AI to keep adding the new queries it generates to our @example-queries.sql file. This helps with the incremental improvement in Gen AI’s plain English to SQL transformation capability.

3. When it works well and when it doesn’t

The setup described in this post works well when trying to write exploratory queries for ad-hoc data requirements. It also works well to obtain initial version of certain queries that we can then pick-up and modify to our specific needs. I found the setup’s capability to directly correlate with the quality of queries present in the example-queries.sql document.

I found this setup to be less useful when trying to optimize a query or when trying to use a specific querying feature (example - recursive CTE for a hierarchy). In such cases, rather than feeding it a lot of inputs, I preferred to obtain the initial version of the query and then manually work through the changes I wanted. These were also the queries that were complicated for me to write, verify, and gradually improve. So, I preferred manual maneuvering (with inputs from Gen AI) rather than one-shotting with Gen AI to ensure accuracy.

Got a web-dev pain point?

I'm an independent web dev & architect.

What I do:

- Architecture - Guidance on frameworks, tools, system design, cloud infra

- Fullstack Dev - Frontend, backend, CMS customization, migration, upgrade

- Optimization - Core web vitals, scalability, SEO compliance

Tech I use:

Node.js, React, Next.js, Postgres, Strapi, Directus, MySQL, AWS, GCP, Serverless (Lambda, CF Workers), ElasticSearch, Redis, RabbitMQ, Kibana

My Clients Include:

Or email: punit@tezify.com

I'm an independent web dev & architect.

What I do:

- Architecture - Guidance on frameworks, tools, system design, cloud infra

- Fullstack Dev - Frontend, backend, CMS customization, migration, upgrade

- Optimization - Core web vitals, scalability, SEO compliance

Tech I use:

Node.js, React, Next.js, Postgres, Strapi, Directus, MySQL, AWS, GCP, Serverless (Lambda, CF Workers), ElasticSearch, Redis, RabbitMQ, Kibana

My Clients include:

Or email : punit@tezify.com

Hire Me

Hire Me