Mitigating serverless cold start delays : My experiments & observations

Additional Context : Serverless is a vast area. This post specifically evaluates whether frequently pinging one or more URLs is a reliable approach to mitigate cold start delays for websites hosted on Node-based serverless platforms like Vercel or Netlify.

Update (Jan, 2026) : I have undertaken a thorough comparison of cold start delays for Vercel, Netlify and Cloudflare when serving a Next.js v16 project. See the outcomes here.

1. Objective

Our team wanted to evaluate whether our Next.js website could be served via any of the available serverless platforms. We saw that Vercel and Netlify are two popular platforms that offer great DX and could serve our Next.js frontend without requiring any platform-specific modifications to our code.

Our Next.js frontend serves a few statically generated routes and a few server-side generated routes. It also serves around a dozen API routes.

I was tasked with determining the speed and scalability impact of serving our website via a serverless platform like Vercel or Netlify.

2. Serverless cold start delays

Given the speed-sensitive nature of our website (eCommerce), one of my primary concerns was to check if cold start delays could affect our site speed (specifically, the server-response speed or the time to first byte).

I had read that cold starts could be prevented by frequently pinging one or more website urls to keep our serverless functions warm. So, I designed an experiment to evaluate if we could mitigate cold start delays when deploying our website on Vercel or Netlify.

3. Experiment details

3.1 Frontend code

I undertook the original experiment with our actual website frontend code (my client team’s private repo). Since I cannot share that code, I re-ran the same experiment with a modified version of the Next.js commerce example for the purpose of this blog post.

Following are the relevant changes made to the forked version of the Next.js commerce example code:

- Modified some pages to be server-side rendered.

- Added a bunch of React libraries like react-data-grid and formik to some server-side routes (but not all server-side rendered routes).

- Added two API routes. Added axios to one of them but not to the other.

- Added logic to detect if a request was served from a cold-started or an already warm runtime.

The repo was deployed on both Vercel and Netlify (free tier plan).

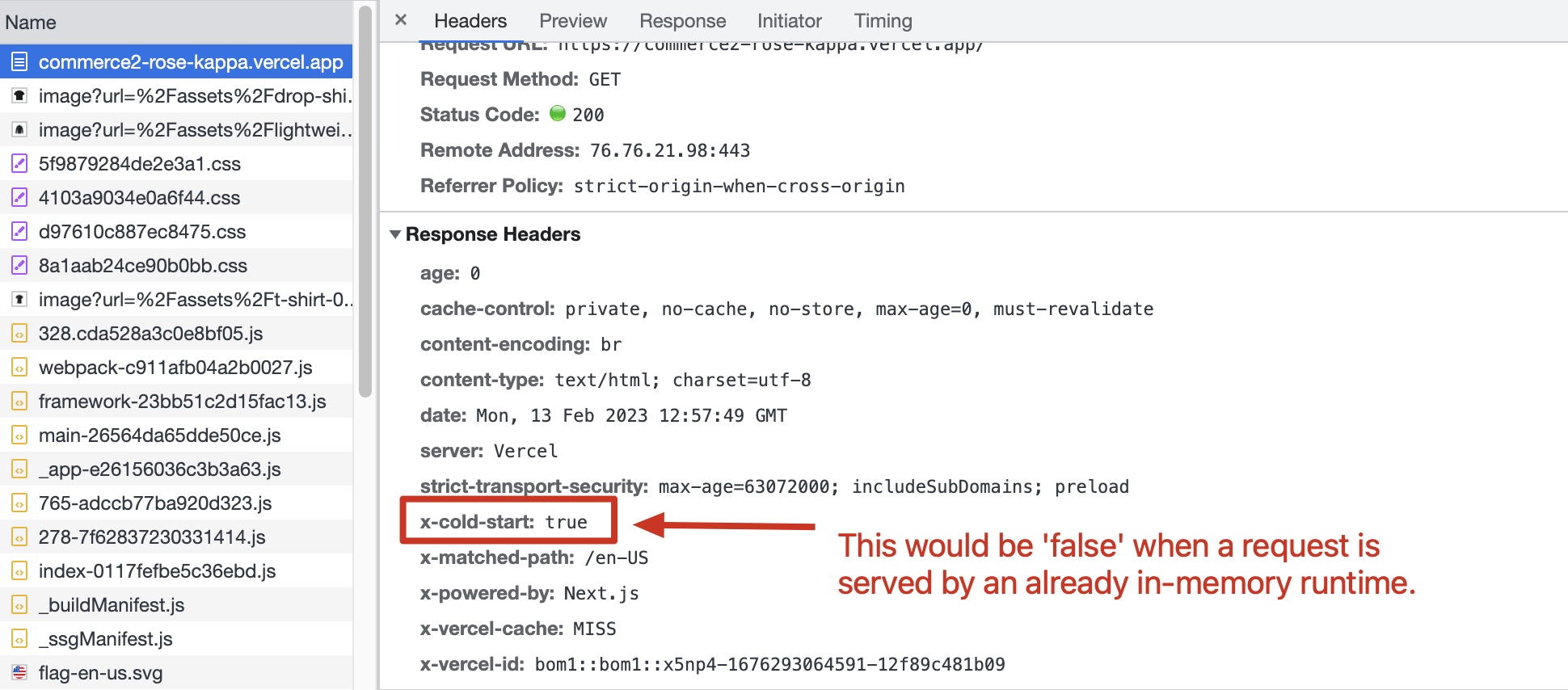

3.2 Detecting if a request is served via a cold-started or a warm runtime

I set up a mechanism so that the server-response would send back a header x-lambda-cold as true or false to tell us if a certain request was served from a cold started or an already running runtime.

To determine the correct value for this response header, a global in-memory object is maintained whose value can be set and read across requests. By accessing this global object, a request can determine whether or not it is the first request to be served by the current runtime. Here and here are the relevant parts for this logic.
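The linked code is not reproduced in this post, so here is only a minimal sketch of the idea. It assumes a module-level flag stands in for the global in-memory object, and the names `isColdStart`, `markColdStart` and `HeaderWritable` are hypothetical, chosen for illustration.

```typescript
// Module-level state lives as long as the runtime instance does.
// A freshly cold-started instance re-evaluates this module, resetting the flag.
let servedFirstRequest = false;

// Returns true only for the first call made within this runtime instance.
function isColdStart(): boolean {
  const cold = !servedFirstRequest;
  servedFirstRequest = true;
  return cold;
}

// Minimal shape of a response object that can carry headers
// (an assumption; Node-style responses expose a compatible setHeader).
interface HeaderWritable {
  setHeader(name: string, value: string): unknown;
}

// Sets x-lambda-cold to "true" or "false" and returns the detected state.
function markColdStart(res: HeaderWritable): boolean {
  const cold = isColdStart();
  res.setHeader("x-lambda-cold", String(cold));
  return cold;
}
```

In a route handler, calling `markColdStart(res)` before sending the response would be enough to expose the flag to a client. Note that the flag is per runtime instance, so two instances of the same function each report one cold start.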

3.3 Test script

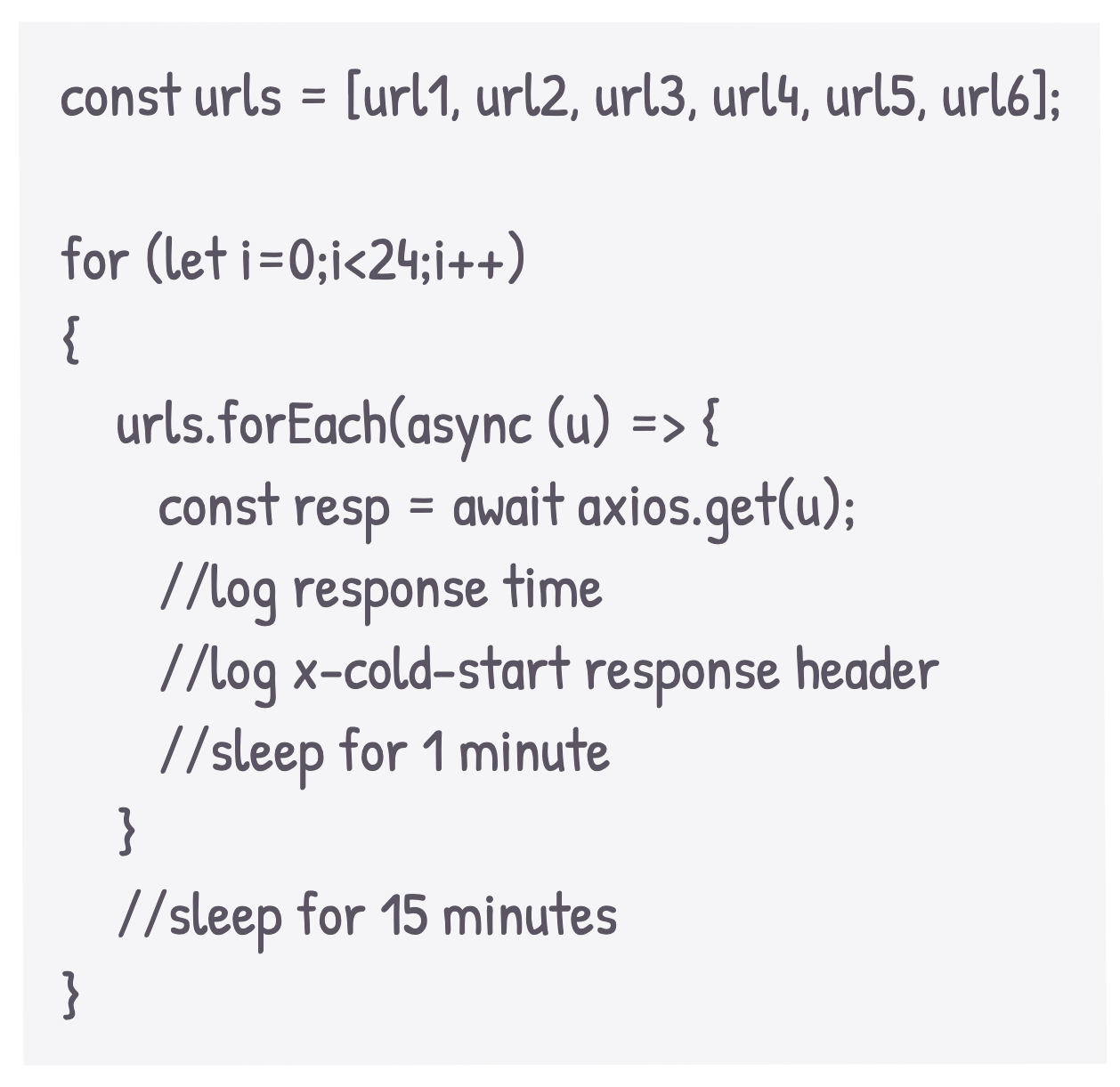

I created a simple script that fires axios.get() requests to the six distinct urls (four server-side rendered routes and two api urls) of the frontend hosted on Vercel and Netlify platforms.

- These requests are made sequentially, with a gap of 1 minute between consecutive requests.

- The script makes a batch of such six requests 24 times, with a gap of 15 minutes between every batch.

- The first url to be hit in each of the 24 iterations is rotated between the six urls.
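The schedule described above can be sketched roughly as follows. This is not the original script: it swaps `axios.get()` for the global `fetch` available in Node 18+ to avoid a dependency, the URLs are placeholders, and it assumes the 15-minute gap is measured from the last request of one batch to the first request of the next.

```typescript
// Hypothetical placeholder URLs; substitute the six deployed routes.
const TARGET_URLS: string[] = [
  "https://example.com/ssr-1",
  "https://example.com/ssr-2",
  "https://example.com/ssr-3",
  "https://example.com/ssr-4",
  "https://example.com/api/a",
  "https://example.com/api/b",
];

interface ProbeResult {
  iteration: number;
  url: string;
  cold: string | null; // raw x-lambda-cold header value, null if absent
  elapsedMs: number;
}

// A probe requests one URL and reports the x-lambda-cold header value.
type Probe = (url: string) => Promise<string | null>;

// Rotate the list so that a different URL leads each iteration.
function rotatedOrder(urls: string[], iteration: number): string[] {
  const start = iteration % urls.length;
  return urls.slice(start).concat(urls.slice(0, start));
}

function sleep(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

// Default probe built on the global fetch (used here in place of axios).
const fetchProbe: Probe = async (url) => {
  const response = await fetch(url);
  await response.arrayBuffer(); // read the body so timing covers the full response
  return response.headers.get("x-lambda-cold");
};

async function runExperiment(
  urls: string[],
  probe: Probe,
  iterations = 24,
  requestGapMs = 60_000,
  batchGapMs = 15 * 60_000,
): Promise<ProbeResult[]> {
  const results: ProbeResult[] = [];
  for (let i = 0; i < iterations; i++) {
    const order = rotatedOrder(urls, i);
    let position = 0;
    for (const url of order) {
      const startedAt = Date.now();
      const cold = await probe(url);
      results.push({ iteration: i, url, cold, elapsedMs: Date.now() - startedAt });
      position++;
      // Wait between requests, but not after the last one in a batch.
      if (position < order.length) await sleep(requestGapMs);
    }
    // Wait between batches, but not after the final batch.
    if (i < iterations - 1) await sleep(batchGapMs);
  }
  return results;
}
```

Calling `runExperiment(TARGET_URLS, fetchProbe)` would start a full run, which takes several hours with the default gaps. Passing the probe as a parameter keeps the scheduling logic testable without network access.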

4. Experiment results

During the 24 iterations, the first request of an iteration was always served by a cold-started runtime. This was expected. The unexpected part: more than 30% of the subsequent requests within an iteration were also served by a cold-started runtime. This happened for the frontend code deployed on both serverless platforms, Netlify and Vercel. The two charts below detail this:

As expected, every time a request was served by a cold-started runtime, it slowed the server-response time on both the platforms.

5. Why do the consecutive requests result in a cold-start?

When six distinct urls for our website are hit sequentially at 1-minute intervals, I expected the first request to result in a cold start, but not the later requests. To understand this behavior better, I looked into how Vercel and Netlify deployed our website code.

Both Vercel (source) and Netlify (source) leverage AWS Lambda for their serverless offering. From the details here and here, it appears that a single website output bundle is split and deployed across multiple AWS Lambda functions. So, two urls for a website, https://xyz.com/route_1 and https://xyz.com/route_2, can potentially be served by different lambda functions. And pinging https://xyz.com/route_1 will not keep the lambda for https://xyz.com/route_2 warm. So, unless we track how our website routes are grouped and deployed across different lambdas, we cannot reliably keep all our routes in memory by pinging the urls.
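To make the reasoning concrete, here is a small sketch that assumes we somehow knew which function serves which route. The mapping, the function ids and the name `functionsLeftCold` are all hypothetical; neither platform is claimed to expose such a mapping in this form.

```typescript
// Hypothetical mapping from route path to the id of the function serving it.
type RouteToFunction = Record<string, string>;

// Returns the ids of functions that none of the pinged routes would reach.
function functionsLeftCold(mapping: RouteToFunction, pingedRoutes: string[]): string[] {
  const warmed: string[] = [];
  for (const route of pingedRoutes) {
    const fn = mapping[route];
    if (fn === undefined) continue; // route not in the mapping
    if (warmed.indexOf(fn) === -1) warmed.push(fn);
  }
  const cold: string[] = [];
  for (const route of Object.keys(mapping)) {
    const fn = mapping[route];
    if (fn === undefined) continue;
    if (warmed.indexOf(fn) === -1 && cold.indexOf(fn) === -1) cold.push(fn);
  }
  return cold;
}

// Illustrative grouping only: two routes share fn_a, one route is on fn_b.
const exampleMapping: RouteToFunction = {
  "/route_1": "fn_a",
  "/route_2": "fn_b",
  "/api/items": "fn_a",
};

// Pinging only /route_1 reaches fn_a but never touches fn_b.
const leftCold = functionsLeftCold(exampleMapping, ["/route_1"]); // ["fn_b"]
```

Even with such a mapping in hand, a ping would only keep one instance of each function warm, so this illustrates the grouping problem rather than a complete fix.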

Serverless platforms split and deploy our single large output bundle across multiple lambdas because function size affects the cold start times and how long the functions are retained in memory. So, forcing all our routes into a single lambda may introduce other cold start delay issues.

6. Conclusion

From the experiment results, I concluded that frequently pinging one or more website urls is not a reliable approach to mitigate cold start delays (also see this). As a result, deploying our website on Vercel, Netlify or a similar Node based serverless platform may potentially result in slow server-response speed for those server-side rendered routes and API end-points that aren’t requested frequently.

I also arrived at the following conclusions:

- Because of the high startup time for Node, any serverless platform that leverages the Node runtime may potentially suffer from cold start delays. As a result, just as we track the cache-hit ratio to determine the effectiveness of a caching solution, any approach to mitigate cold start delays should be tracked via a cold start to warm cache ratio.

- Serverless platforms based on alternate runtimes (like V8 isolates, WebAssembly) that minimize cold-start delays to single-digit milliseconds may be better suited to serve speed-sensitive requests. But the platform-specific code changes required for such platforms can add friction when moving to or from them.
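One way such a metric could be computed from logged observations is sketched below. It assumes each observation records the URL and whether the x-lambda-cold header was true; the `Observation` shape and the function names are hypothetical, and the metric is expressed as the share of requests that hit a cold start.

```typescript
interface Observation {
  url: string;
  cold: boolean; // true when the response carried x-lambda-cold: true
}

// Share of requests served by a cold-started runtime, between 0 and 1.
function coldStartShare(observations: Observation[]): number {
  if (observations.length === 0) return 0;
  let coldCount = 0;
  for (const o of observations) {
    if (o.cold) coldCount++;
  }
  return coldCount / observations.length;
}

// Same metric broken down per URL, to spot routes that rarely stay warm.
function coldStartShareByUrl(observations: Observation[]): Record<string, number> {
  const totals: Record<string, { cold: number; all: number }> = {};
  for (const o of observations) {
    const entry = totals[o.url] ?? { cold: 0, all: 0 };
    entry.all++;
    if (o.cold) entry.cold++;
    totals[o.url] = entry;
  }
  const shares: Record<string, number> = {};
  for (const url of Object.keys(totals)) {
    const entry = totals[url];
    if (entry !== undefined) shares[url] = entry.cold / entry.all;
  }
  return shares;
}
```

Tracking this value over time, before and after a mitigation is introduced, would show whether the mitigation actually reduces cold starts for the routes that matter.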

Want a fast frontend that scales?

I've built and improved B2C frontends serving 2M+ monthly users to:

- Optimize Core Web Vitals

- Solve scalability issues

- Implement SEO compliance

- Select the right framework, library and hosting platform

- Incrementally migrate or upgrade frontend frameworks

Or email: punit@tezify.com

Hire Me
