How we prevented CDN bandwidth abuse & mitigated AWS billing spike

A few weeks ago, at one of my clients, our AWS bill for the previous day showed a 20% rise compared to the earlier days of the week. Having experienced a few AWS billing nightmares in the past, we actively watch for any unusual increase in our AWS resource consumption & billing. A quick look attributed the increase to higher CloudFront bandwidth consumption. On a typical day, our CloudFront bandwidth consumption is around 950 GB; on this particular day, it had jumped to around 1,600 GB. Having seen such increases every once in a while, we assumed the extra bandwidth came from some of our content (blog posts, photos or videos) going viral or one of our marketing campaigns hitting a sweet spot.

The day after the increase, bandwidth consumption was around 1,300 GB. Again, we quickly assumed that whatever had gone viral was cooling off. But on the third day, our CDN bandwidth consumption spiked to around 3,700 GB. At $0.109 per GB of data transfer, the roughly 2,750 GB above our typical day meant about $300 of extra spend per day. This increase was high enough to demand our immediate attention to identify the cause of the jump in bandwidth consumption.
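The cost estimate above is simple arithmetic. The sketch below assumes a single flat price of $0.109 per GB; actual CloudFront data transfer pricing is tiered by region and monthly volume, so treat the result as a rough estimate. The function name is hypothetical.

```python
def extra_daily_cost(baseline_gb: float, observed_gb: float, price_per_gb: float) -> float:
    """Extra data-transfer spend for one day compared to a typical day,
    assuming a single flat price per GB."""
    extra_gb = max(observed_gb - baseline_gb, 0.0)
    return extra_gb * price_per_gb


if __name__ == "__main__":
    # Figures from the post: ~950 GB on a typical day, ~3,700 GB on day three.
    cost = extra_daily_cost(950, 3700, 0.109)
    print(f"${cost:.2f} extra per day")  # prints "$299.75 extra per day"
```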

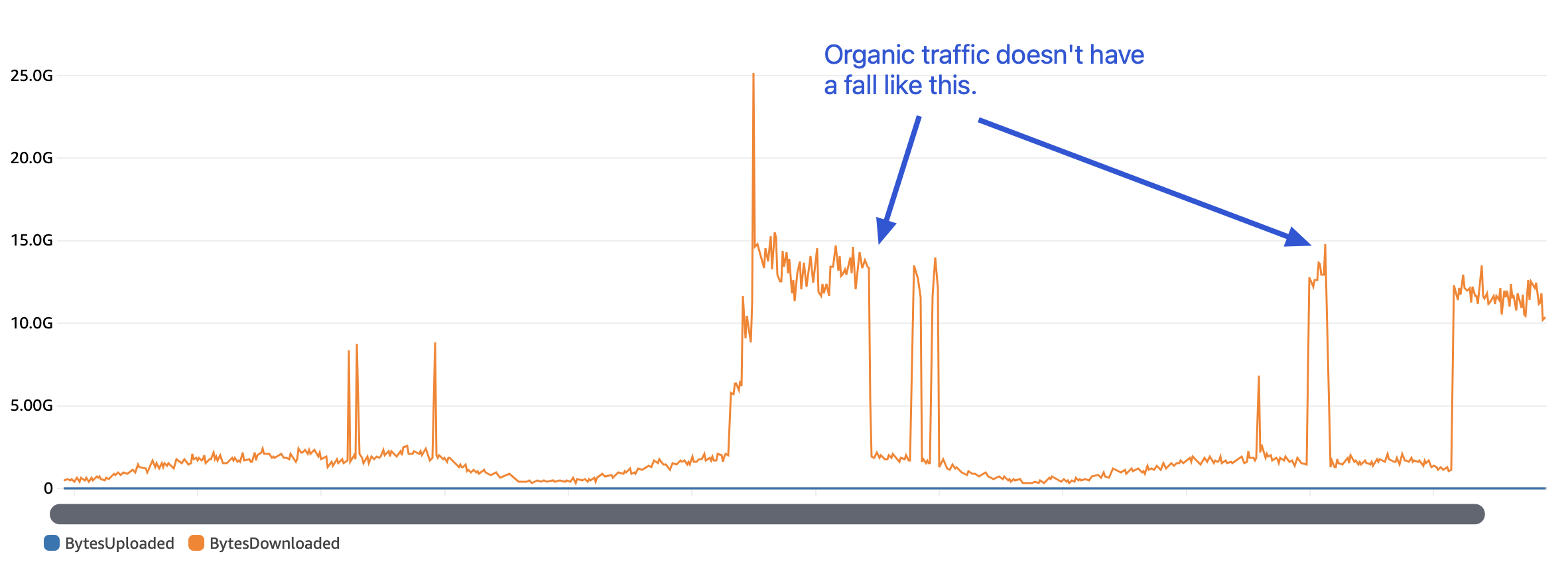

As we looked at the hourly bandwidth consumption for our CDN distributions in CloudWatch, we observed abrupt increases and drops. Traffic from viral content does not drop off this abruptly. This was the first time we suspected that someone was abusing one of our CDN endpoints.
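The abruptness heuristic can be expressed as a quick check over hourly data-transfer figures. This is a minimal sketch, not what we ran: the hourly values are hypothetical, and the 3x hour-over-hour threshold (`factor`) is an assumed value you would tune to your own traffic.

```python
from typing import List, Tuple


def abrupt_changes(hourly_gb: List[float], factor: float = 3.0) -> List[Tuple[int, str]]:
    """Return (hour_index, 'spike' | 'drop') wherever traffic changes by at
    least `factor` times compared to the previous hour."""
    flagged = []
    for i in range(1, len(hourly_gb)):
        prev, cur = hourly_gb[i - 1], hourly_gb[i]
        if cur > prev and cur >= prev * factor:
            flagged.append((i, "spike"))
        elif prev > cur and prev >= cur * factor:
            flagged.append((i, "drop"))
    return flagged


if __name__ == "__main__":
    # Hypothetical hourly GB values: a step up, a plateau, then a step down.
    print(abrupt_changes([40, 42, 41, 160, 158, 45, 43]))  # [(3, 'spike'), (5, 'drop')]
```

Organic surges tend to ramp up and decay over several hours, so they rarely trip a large hour-over-hour factor in both directions.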

Rate Limiter

Our immediate fix was an AWS WAF rate-based rule for the affected CDN distribution, limiting the number of requests each IP address can make per 5-minute window. This brought down our data transfer numbers, and at this point we thought we were done with the issue.
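For reference, a WAFv2 rate-based rule of this kind can be sketched as the rule structure that goes into a web ACL's `Rules` list. The rule name, priority, and `limit` value below are hypothetical; the evaluation window is left at its 5-minute default.

```python
def rate_limit_rule(name: str, limit: int, priority: int = 0) -> dict:
    """Build a WAFv2 rule that blocks a client IP once it exceeds `limit`
    requests within the rate-based rule's evaluation window."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {
                "Limit": limit,
                "AggregateKeyType": "IP",  # count requests per client IP
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


if __name__ == "__main__":
    # Hypothetical limit; tune it to what a real visitor could plausibly request.
    print(rate_limit_rule("cdn-rate-limit-per-ip", 1000))
```

The rule would be attached to a web ACL created with `Scope="CLOUDFRONT"`; WAF resources for CloudFront are managed through the US East (N. Virginia) Region, us-east-1.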

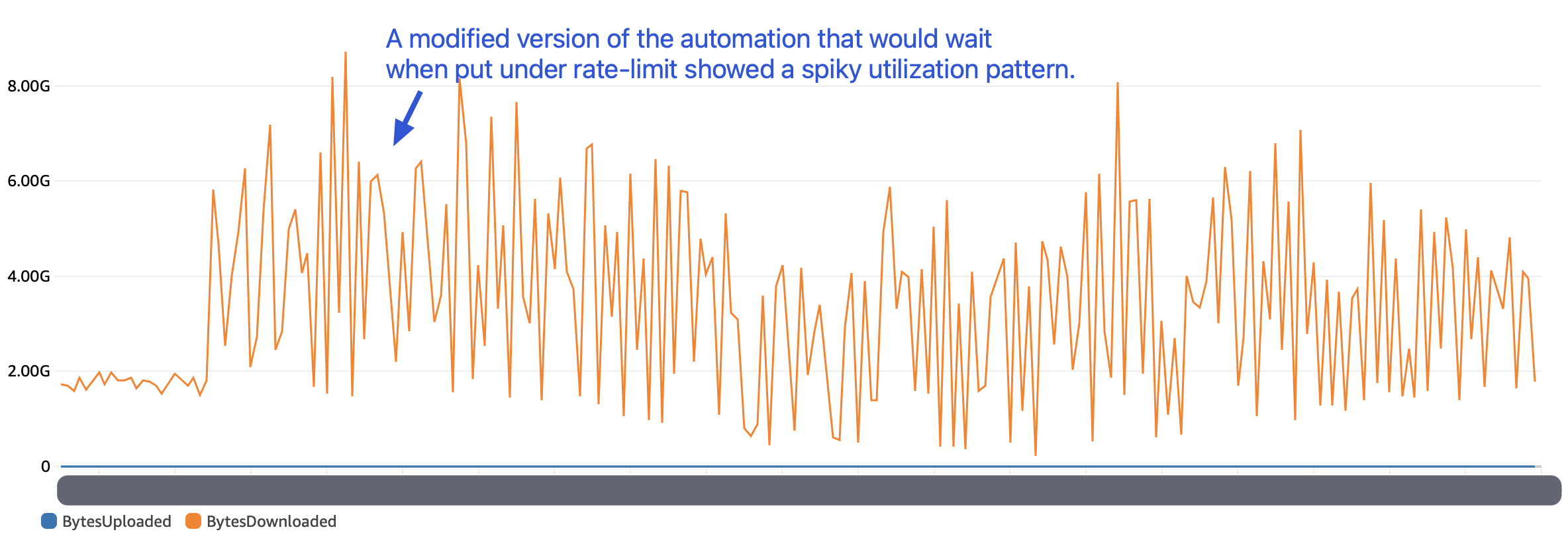

However, around 8 hours after we put this change in place, we started to see a different pattern in the data transfer for our CDN endpoint. Observing the trend closely, it was clear that whoever was abusing our CDN endpoint now waited a few minutes each time they hit the rate limit.
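Why pausing works against a rate limit is easy to see in a simplified model. The sketch below is a per-IP sliding-window counter assumed for illustration; it is not how AWS WAF implements rate-based rules internally, and the limit, window, and timestamps are hypothetical.

```python
from collections import deque
from typing import Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within any trailing `window` seconds.

    A simplified model of a rate-based rule, not WAF's actual implementation.
    """

    def __init__(self, limit: int, window: float = 300.0):
        self.limit = limit
        self.window = window
        self.hits: Dict[str, Deque[float]] = {}  # key -> timestamps of allowed requests

    def allow(self, key: str, now: float) -> bool:
        q = self.hits.setdefault(key, deque())
        # Forget requests that have aged out of the trailing window.
        while q and q[0] <= now - self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(limit=3, window=300)
    ip = "198.51.100.7"  # documentation-range IP
    print([limiter.allow(ip, t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
    # An abuser who pauses until the earlier requests age out gets through again.
    print(limiter.allow(ip, 302))  # True
```

This is why a rate limit alone throttles a patient abuser but does not stop them: by pacing requests to stay near the limit, they can still pull a steady stream of data.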

This made it clear that someone was specifically and systematically abusing our CDN endpoint. It also meant we would need better safeguards and would have to watch for changes in the nature of the abuse in the coming days & weeks.

CDN Access Logs

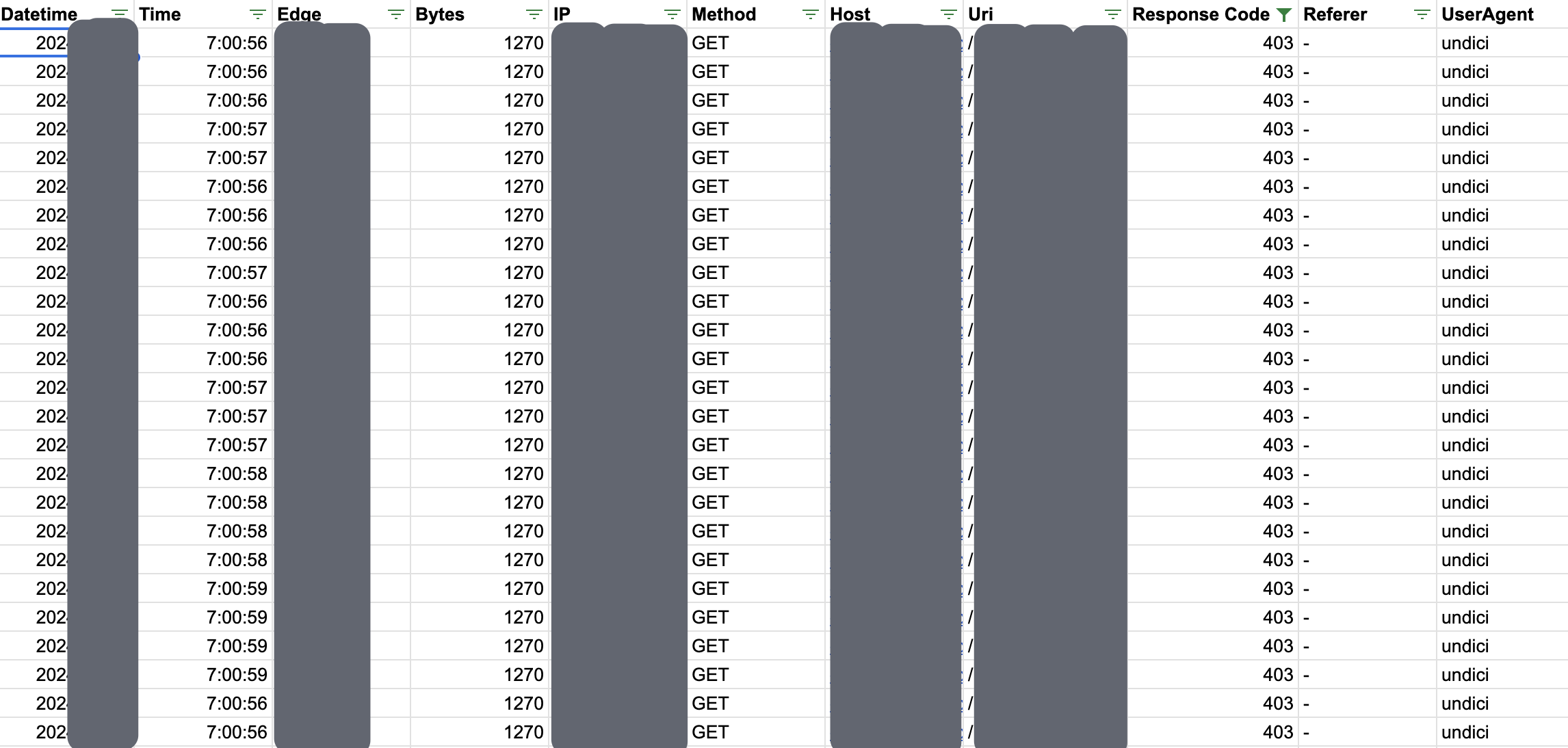

To understand the nature of the requests made to our CDN endpoint, we enabled access logs for the distribution for a few minutes and loaded part of these logs into a spreadsheet to dig deeper.

Filtering these logs to the requests that were blocked by the AWS WAF rate limiter, we learned the following:

- The abuser had obtained valid static asset URLs via a specific public API endpoint and was cycling through this relatively large set of URLs.

- The abuser was leveraging Google Cloud (Mumbai) infrastructure to fetch the data.

- The abuser was using node-fetch to fetch the data (User-Agent: undici).
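A spreadsheet works, but the same filtering can be scripted. The sketch below parses CloudFront standard access logs (tab-separated, with a `#Fields:` header) and counts requests by client IP and User-Agent for a given status code. It assumes WAF-blocked requests show up with status 403 (WAF's default block response), and the function name is hypothetical. Fields are looked up by name, so it also works on logs containing only a subset of the columns.

```python
from collections import Counter
from typing import List, Tuple
from urllib.parse import unquote


def summarize_by_status(log_text: str, status: str = "403") -> Tuple[Counter, Counter]:
    """Count requests with the given HTTP status by client IP and by User-Agent
    in a CloudFront standard access log."""
    fields: List[str] = []
    by_ip: Counter = Counter()
    by_agent: Counter = Counter()
    for line in log_text.splitlines():
        if line.startswith("#Fields:"):
            # Column names, e.g. "date time x-edge-location sc-bytes c-ip ..."
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#"):
            continue  # skip blank lines and other directives such as #Version
        row = dict(zip(fields, line.split("\t")))
        if row.get("sc-status") != status:
            continue
        by_ip[row.get("c-ip", "-")] += 1
        # The log URL-encodes characters such as spaces in this field; decode for readability.
        by_agent[unquote(row.get("cs(User-Agent)", "-"))] += 1
    return by_ip, by_agent
```

Feeding it the decompressed contents of a log file and calling `by_ip.most_common(10)` or `by_agent.most_common(10)` lists the noisiest clients.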

User-Agent

To buy some time, we blocked requests with the User-Agent undici. This was safe to do since we do not expect actual users of our website to be using node-fetch. However, as expected, the abuser switched to a commonly used User-Agent string, which prevented us from blocking their requests by User-Agent.
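A header-based block like this can be sketched as a WAFv2 `ByteMatchStatement` on the `user-agent` header. The rule name and priority are hypothetical. Since the User-Agent is set by the client, this kind of rule only buys time, as the abuser's switch showed.

```python
def block_user_agent_rule(name: str, needle: str, priority: int) -> dict:
    """WAFv2 rule that blocks requests whose User-Agent header contains `needle`.
    The header is lowercased before matching, so `needle` is lowercased too."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "ByteMatchStatement": {
                "SearchString": needle.lower().encode(),  # boto3 takes bytes here
                "FieldToMatch": {"SingleHeader": {"Name": "user-agent"}},
                "TextTransformations": [{"Priority": 0, "Type": "LOWERCASE"}],
                "PositionalConstraint": "CONTAINS",
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }
```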

IP Addresses

To buy some more time, we blocked the specific IP addresses making the requests. This turned into a cat-and-mouse game, with the abuser switching to new IP addresses as soon as we blocked the old ones. Still, the rate limiter and the IP blocks together bought us the time we needed to design a long-term mechanism to prevent the abuse.
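WAF IP sets store CIDR ranges, and each IP set holds a single IP version (IPv4 or IPv6). A minimal sketch, with a hypothetical helper name, that turns offending addresses copied from the logs into deduplicated single-host CIDRs ready for an IP set:

```python
import ipaddress
from typing import Iterable, List, Tuple


def to_ip_set_addresses(ips: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Convert client IPs into single-host CIDRs, split into (IPv4, IPv6) lists."""
    v4, v6 = set(), set()
    for raw in ips:
        addr = ipaddress.ip_address(raw.strip())  # raises ValueError on bad input
        if addr.version == 4:
            v4.add(f"{addr}/32")
        else:
            v6.add(f"{addr}/128")
    return sorted(v4), sorted(v6)


if __name__ == "__main__":
    print(to_ip_set_addresses(["203.0.113.7", "2001:db8::1", "203.0.113.7"]))
    # (['203.0.113.7/32'], ['2001:db8::1/128'])
```

Invalid addresses raise `ValueError`, which is a reasonable failure mode for hand-copied log entries.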

Long-term Safeguards

Our long-term safeguard against this (and future) abuse was to block any requests coming from the various cloud providers. The affected CDN endpoint serves static files requested by our visitors' browsers and apps, so we did not expect real visitor requests to come from AWS, GCP, Azure, etc. Blocking requests from the various cloud providers would therefore not affect our organic traffic. However, it could potentially block the following:

- requests from search engine crawlers, which we want to serve so that our content gets indexed

- a few automation tools & services we use (which may be hosted on cloud infrastructure)
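The trade-off above implies an evaluation order: check the whitelist first, then the cloud-provider blocklist, and let everything else fall through to the remaining rules. A minimal sketch of that decision, with hypothetical names and documentation-range CIDRs standing in for the real lists:

```python
import ipaddress
from typing import Iterable


def _contains(cidrs: Iterable[str], ip) -> bool:
    # An IPv4 address is never "in" an IPv6 network (and vice versa), so mixed lists are fine.
    return any(ip in ipaddress.ip_network(c, strict=False) for c in cidrs)


def decide(client_ip: str, whitelist: Iterable[str], cloud_blocklist: Iterable[str]) -> str:
    """Return 'allow' for whitelisted IPs, 'block' for cloud-provider IPs,
    and 'default' for everything else (left to the remaining rules)."""
    ip = ipaddress.ip_address(client_ip)
    if _contains(whitelist, ip):
        return "allow"
    if _contains(cloud_blocklist, ip):
        return "block"
    return "default"


if __name__ == "__main__":
    whitelist = ["192.0.2.0/28"]  # stand-in for crawler and tool ranges
    cloud_blocklist = ["192.0.2.0/24", "198.51.100.0/24"]  # stand-in for cloud ranges
    for ip in ["192.0.2.5", "192.0.2.100", "203.0.113.9"]:
        print(ip, decide(ip, whitelist, cloud_blocklist))
    # 192.0.2.5 allow / 192.0.2.100 block / 203.0.113.9 default
```

In AWS WAF, the equivalent is an Allow rule with a lower priority number than the Block rule. Allow is a terminating action, so whitelisted IPs also skip any later rules, including the rate limit, unless the rate-based rule is ordered ahead of the Allow rule.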

To implement this approach, we did the following:

- The major cloud providers publish the IP ranges of their infrastructure (e.g. AWS, GCP, Azure, DigitalOcean). We blocked these CIDR ranges.

- The major search engines publish the IP ranges of their crawlers (e.g. Googlebot, Bingbot). We whitelisted these ranges.

- We also whitelisted the IP addresses of the services & automation tools we use.
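Most of this list-building can be scripted from the published files. The sketch below extracts CIDRs from AWS's ip-ranges.json and from Google's JSON files (cloud.json for Google Cloud, googlebot.json for Googlebot), which share a `prefixes` list with `ipv4Prefix`/`ipv6Prefix` keys. The function names are hypothetical, and other providers, such as Azure, publish their ranges in different formats that would need their own parsers.

```python
import json
from typing import List
from urllib.request import urlopen

AWS_RANGES_URL = "https://ip-ranges.amazonaws.com/ip-ranges.json"
GCP_RANGES_URL = "https://www.gstatic.com/ipranges/cloud.json"
GOOGLEBOT_RANGES_URL = "https://developers.google.com/static/search/apis/ipranges/googlebot.json"


def aws_cidrs(doc: dict) -> List[str]:
    """Extract IPv4 and IPv6 prefixes from AWS's ip-ranges.json."""
    v4 = [p["ip_prefix"] for p in doc.get("prefixes", [])]
    v6 = [p["ipv6_prefix"] for p in doc.get("ipv6_prefixes", [])]
    return sorted(set(v4 + v6))  # the same prefix can appear under several services


def google_style_cidrs(doc: dict) -> List[str]:
    """Extract prefixes from Google-style files, where each entry has
    either an 'ipv4Prefix' or an 'ipv6Prefix' key."""
    out = set()
    for p in doc.get("prefixes", []):
        prefix = p.get("ipv4Prefix") or p.get("ipv6Prefix")
        if prefix:
            out.add(prefix)
    return sorted(out)


def fetch_json(url: str) -> dict:
    """Download and parse one of the published range files."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)
```

For example, `aws_cidrs(fetch_json(AWS_RANGES_URL))` yields the AWS blocklist candidates, and `google_style_cidrs(fetch_json(GOOGLEBOT_RANGES_URL))` the Googlebot whitelist.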

In addition to the above, we set up AWS CloudWatch alarms (number of requests per hour, BytesDownloaded per hour) to catch any future abuse that escapes our safeguards. We also continue to keep the AWS WAF rate limits in place.
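As a sketch of the alarm side, the helper below builds keyword arguments for CloudWatch's `put_metric_alarm` on a CloudFront distribution metric summed per hour. CloudFront metrics use the `AWS/CloudFront` namespace with `DistributionId` and `Region=Global` dimensions and are available in us-east-1. The alarm name format, threshold, and SNS topic ARN are hypothetical placeholders.

```python
def cloudfront_hourly_alarm(distribution_id: str, metric: str,
                            threshold: float, sns_topic_arn: str) -> dict:
    """Keyword arguments for put_metric_alarm: alert when `metric`
    (e.g. "Requests" or "BytesDownloaded") summed over an hour exceeds `threshold`."""
    return {
        "AlarmName": f"cloudfront-{distribution_id}-{metric}-hourly",
        "Namespace": "AWS/CloudFront",
        "MetricName": metric,
        "Dimensions": [
            {"Name": "DistributionId", "Value": distribution_id},
            {"Name": "Region", "Value": "Global"},
        ],
        "Statistic": "Sum",
        "Period": 3600,  # one-hour buckets
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


def create_alarm(alarm_kwargs: dict) -> None:
    """Create or update the alarm (needs boto3 and AWS credentials)."""
    import boto3  # imported here so the builder above works without boto3

    # CloudFront metrics are published in us-east-1.
    boto3.client("cloudwatch", region_name="us-east-1").put_metric_alarm(**alarm_kwargs)
```

With our typical ~950 GB per day (roughly 40 GB per hour on average), a `BytesDownloaded` threshold around 2x the hourly average might be a starting point, though that multiplier is an assumption to tune against real peaks.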

Limitations

With the above safeguards in place, we haven't seen the abuser return. However, we are aware of the following limitations of our safeguards, which we plan to address in the future:

- The abuser may switch to a cloud provider we have not blocked. We expect our alerts to detect such abuse so that we can extend our blacklisted IP ranges.

- We need to periodically update our blacklisted and whitelisted IP ranges. We are looking for ways to automate this process (suggestions welcome).
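One possible way to automate the updates, sketched under assumptions: a scheduled job (or one triggered by AWS's `AmazonIpSpaceChanged` SNS notification for ip-ranges.json) rebuilds the desired CIDR list, collapses adjacent ranges per IP version to reduce the entry count, and replaces the contents of an existing CloudFront-scoped WAF IP set. The helper names are hypothetical, and the IP set name and ID would come from your own setup.

```python
import ipaddress
from typing import Iterable, List, Tuple


def collapse(cidrs: Iterable[str], version: int) -> List[str]:
    """Merge adjacent/overlapping ranges of one IP version (an IP set is single-version)."""
    nets = [ipaddress.ip_network(c, strict=False) for c in cidrs]
    nets = [n for n in nets if n.version == version]
    return sorted(str(n) for n in ipaddress.collapse_addresses(nets))


def diff(current: Iterable[str], desired: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (added, removed) CIDRs between the current and desired lists."""
    cur, want = set(current), set(desired)
    return sorted(want - cur), sorted(cur - want)


def sync_ip_set(name: str, ip_set_id: str, desired: List[str]) -> Tuple[List[str], List[str]]:
    """Replace a CloudFront-scoped WAF IP set's addresses with `desired`."""
    import boto3  # imported lazily so the helpers above run without it

    waf = boto3.client("wafv2", region_name="us-east-1")
    resp = waf.get_ip_set(Name=name, Scope="CLOUDFRONT", Id=ip_set_id)
    added, removed = diff(resp["IPSet"]["Addresses"], desired)
    if added or removed:
        # update_ip_set replaces the whole address list; LockToken guards concurrent edits.
        waf.update_ip_set(
            Name=name,
            Scope="CLOUDFRONT",
            Id=ip_set_id,
            Addresses=desired,
            LockToken=resp["LockToken"],
        )
    return added, removed
```

Logging the returned `added` and `removed` lists gives a simple audit trail of how the blocked ranges change over time.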

Conclusion

A downside of usage-based billing is the potential for billing shocks. One way to limit such shocks is to put rate limiting in place on every public endpoint to prevent abuse (for static responses) or denial of service (for non-static responses).

Another way to prevent scripts running in the wild from abusing our public endpoints is to make those endpoints inaccessible from the various cloud providers' infrastructure.

I'm not a cloud infrastructure specialist, but my experience as an architect working with various teams has allowed me to execute projects at the intersection of software development and infrastructure.

Some highlights of my SRE work include architecting scalable backends, implementing observability, improving cache-hit ratios, and optimizing cloud setups for cost efficiency without sacrificing performance.
