How to integrate Elasticsearch with Strapi

1. Goal

The goal of this post is to provide the implementation specifics to integrate Elasticsearch with Strapi so that:

- Any content entered within Strapi can be indexed via Elasticsearch.

- Elasticsearch indexing can be triggered asynchronously via Strapi.

- A Strapi CMS API is available to front the Elasticsearch search API.

At the end of the post, we also look at a few enhancements to improve upon our Strapi - Elasticsearch integration.

2. Setting up Strapi & Elasticsearch

Before getting into the implementation specifics, we need to have both Strapi and Elasticsearch running. We also need to install Elasticsearch’s JavaScript client library, @elastic/elasticsearch:

- Let’s create a new Strapi project to have a fresh running Strapi instance. I used the "Quickstart (recommended)" installation type for the purpose of this post:

```shell
yarn create strapi-app strapi-integrate-elasticsearch
```

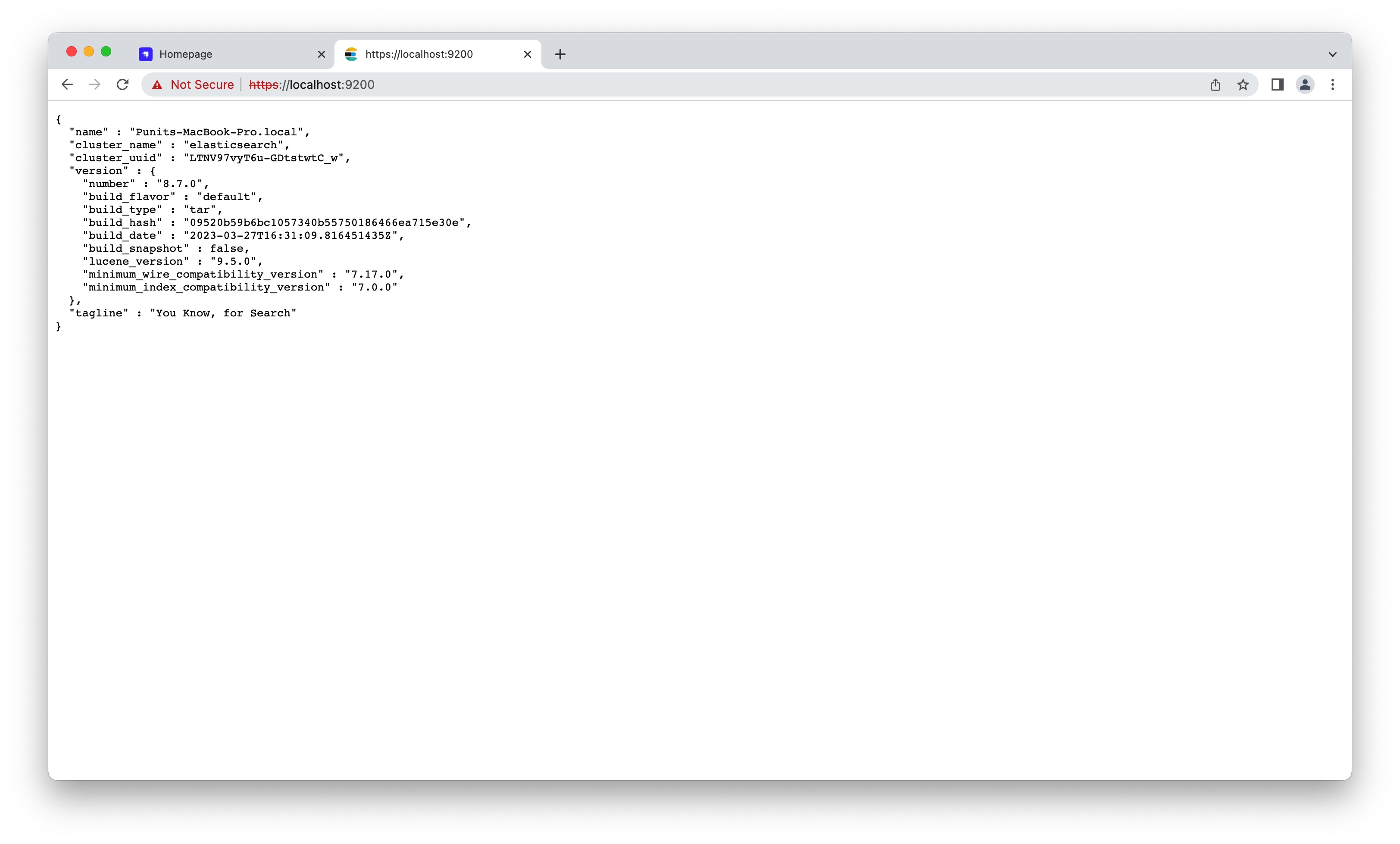

- Next up, let’s install Elasticsearch via its official installable packages. On my macOS machine, I installed Elasticsearch at `/opt/elasticsearch-8.7.0`.

- With Elasticsearch installed at `/opt/elasticsearch-8.7.0`, I ran it by typing the following on the command line:

```shell
/opt/elasticsearch-8.7.0/bin/elasticsearch
```

- Let’s note down the credentials printed in the terminal when Elasticsearch runs for the first time. These are needed to log in at https://localhost:9200 (ignore the SSL warning) to view the following screen:

- Finally, within our Strapi project, let’s install the `@elastic/elasticsearch` library. This is Elasticsearch’s JavaScript client library that we plan to use to invoke Elasticsearch APIs:

```shell
yarn add @elastic/elasticsearch
```

3. Identifying the collections & fields to be indexed

Our Strapi CMS setup may have hundreds of collections, each with dozens of fields. Out of these, we need to know the collections and the fields that need to be searchable. We also need to know the list of fields we want to return as part of the search match results. Typically, this should be part of the business requirements for the search feature.

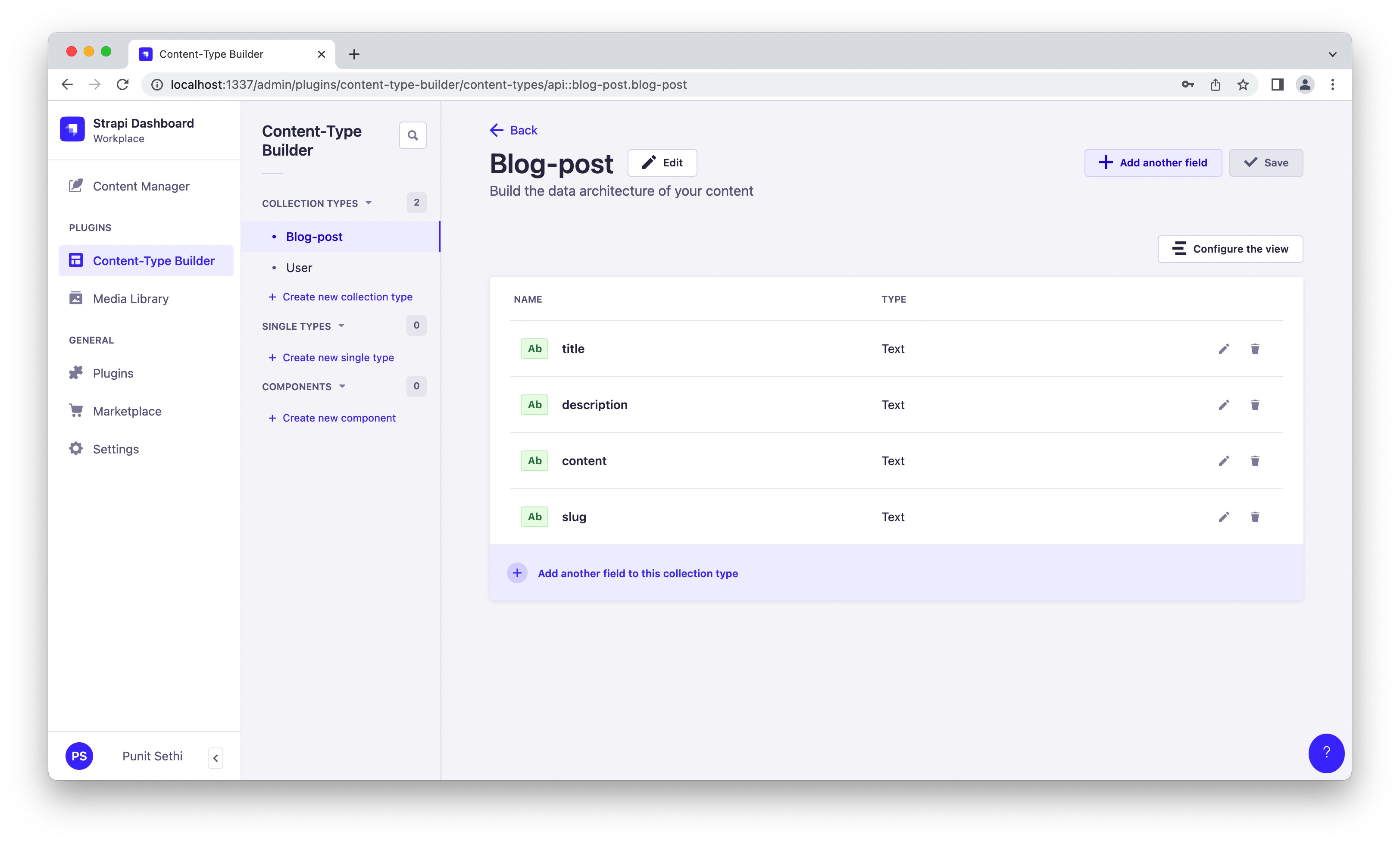

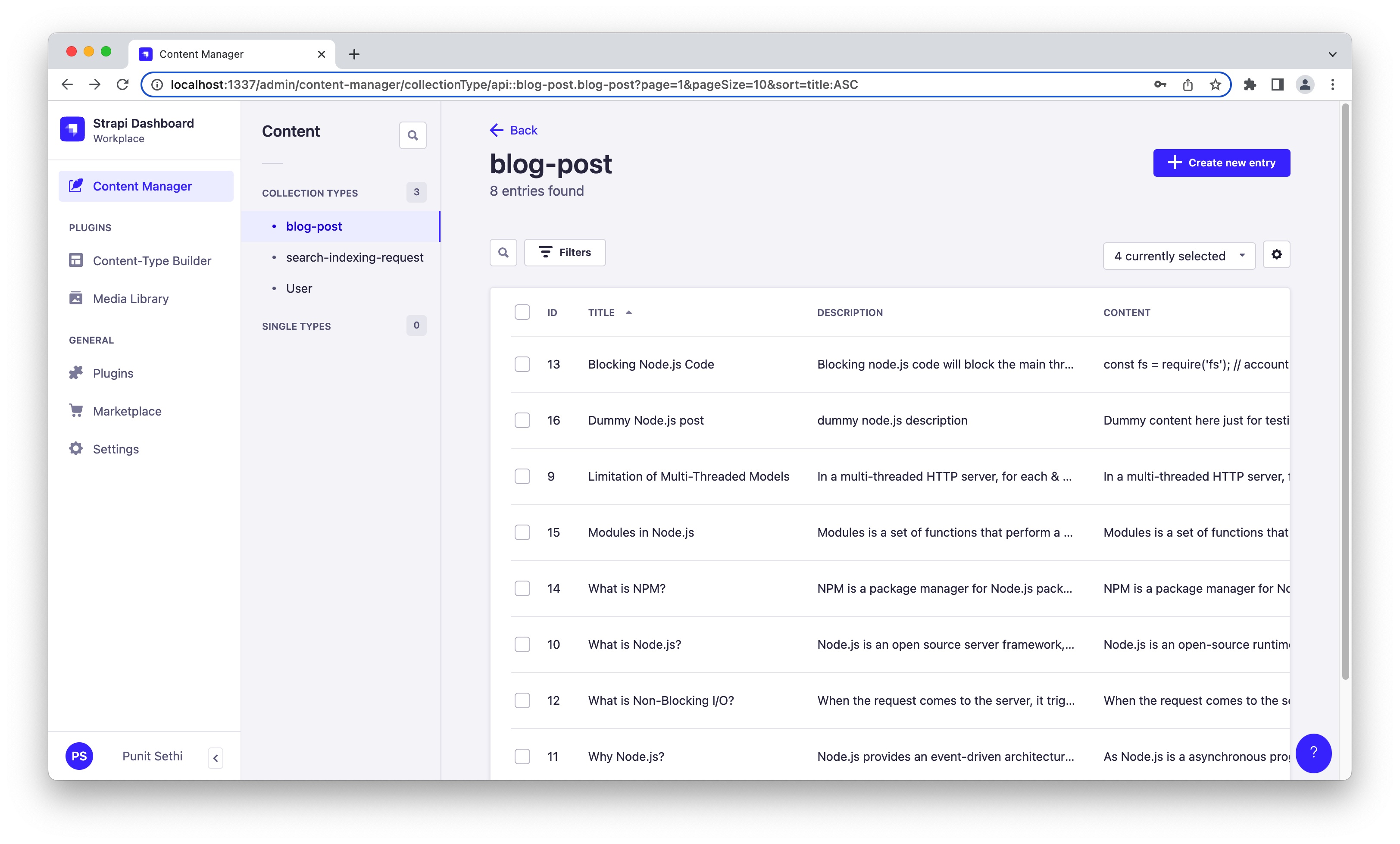

For the purpose of this post & the supplementary code, I created a collection blog-post with the following fields:

When invoking Elasticsearch’s API to index the data, we need to pass the following items to Elasticsearch:

- Document ID (a unique identifier for each indexed item): With Strapi, a combination of the collection’s `singularName` and the record `id` serves this purpose well. For example, the first record within our `blog-post` collection gets indexed as `blog-post::1`, and so on.

- Content: The content that we want to index and make searchable (e.g., the `blog-post` fields `title`, `description` and `content`).

- Additional information: The content that we want to store in Elasticsearch so that it is returned with the search results but is not used as search criteria (e.g., the `blog-post` field `slug`).
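To make the split between document ID, searchable content and additional information concrete, here is a minimal sketch of turning one Strapi record into what gets sent to Elasticsearch. `INDEX_CONFIG`, `toDocumentId` and `toIndexDocument` are hypothetical names, not part of this post's code; the field lists mirror the `blog-post` example above.

```javascript
// Hypothetical configuration (not from the original code): per collection,
// which fields are searchable and which are only stored to be returned with results.
const INDEX_CONFIG = {
  'blog-post': {
    searchable: ['title', 'description', 'content'],
    additional: ['slug'],
  },
};

// Builds the document ID in the `<singularName>::<id>` format, e.g. `blog-post::1`.
function toDocumentId(singularName, recordId) {
  return `${singularName}::${recordId}`;
}

// Returns the ID and document body for one Strapi record, keeping only the
// configured fields and skipping any that are undefined on the record.
function toIndexDocument(singularName, record) {
  const config = INDEX_CONFIG[singularName];
  if (!config) {
    throw new Error(`Collection "${singularName}" is not configured for indexing.`);
  }
  const document = {};
  for (const field of [...config.searchable, ...config.additional]) {
    if (record[field] !== undefined) {
      document[field] = record[field];
    }
  }
  return { id: toDocumentId(singularName, record.id), document };
}
```

Fields such as `createdAt` are dropped because they are not listed. Keeping the field lists in one object like this is one way to approach making the indexed collections and fields configurable.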

4. Connecting to Elasticsearch from Strapi

- It is ideal to keep all the Elasticsearch-specific code in a separate folder. We create an `elastic` folder at the top level of our repository:

```shell
mkdir elastic
```

- To invoke Elasticsearch APIs from our code, we need to copy the installed Elasticsearch’s certificate into our repo. On my local machine, I did so via:

```shell
mkdir elastic/certs
cp /opt/elasticsearch-8.7.0/config/certs/http_ca.crt elastic/certs/local.crt
```

- As we run the CMS in other environments (e.g., dev, staging, production), we can copy the Elasticsearch certificates from those environments into our `elastic/certs` folder. We control which certificate to use via the `ELASTIC_CERT_NAME` variable in `.env`, depending on the environment.

- Next, we create `elastic/elasticClient.js`. All our code to interact with Elasticsearch will go in here.

- Let’s start with the code to initialize the Elasticsearch JavaScript client:

```javascript
const { Client } = require('@elastic/elasticsearch');
const fs = require('fs');
const path = require('path');

// Shared client instance, created once during Strapi bootstrap
let client = null;

function initializeESClient() {
  try {
    client = new Client({
      node: process.env.ELASTIC_HOST,
      auth: {
        username: process.env.ELASTIC_USERNAME,
        password: process.env.ELASTIC_PASSWORD,
      },
      tls: {
        // ELASTIC_CERT_NAME is resolved relative to the elastic/ folder
        ca: fs.readFileSync(path.join(__dirname, process.env.ELASTIC_CERT_NAME)),
        // Skips certificate verification; only acceptable for local development
        rejectUnauthorized: false,
      },
    });
  } catch (err) {
    console.log('Error while initializing the connection to Elasticsearch.');
    console.log(err);
  }
}

module.exports = {
  initializeESClient,
};
```

- Note that we need to set up our `.env` with the following:

```
####Start : Elastic Search specific items
ELASTIC_HOST="https://127.0.0.1:9200"
ELASTIC_USERNAME="elastic"
ELASTIC_PASSWORD="<enter-es-password-here>"
ELASTIC_INDEX_NAME="blog-example-search-index"
ELASTIC_CERT_NAME="certs/local.crt"
####End : Elastic Search specific items
```
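A missing `.env` entry otherwise only surfaces when the client fails to initialize or the first Elasticsearch call errors out, so it can help to check these variables on startup. A minimal sketch; `findMissingElasticVars` and `assertElasticEnv` are hypothetical helpers, not part of the original code, and the variable names are the ones listed above:

```javascript
// Variable names taken from the .env entries above
const REQUIRED_ELASTIC_VARS = [
  'ELASTIC_HOST',
  'ELASTIC_USERNAME',
  'ELASTIC_PASSWORD',
  'ELASTIC_INDEX_NAME',
  'ELASTIC_CERT_NAME',
];

// Returns the names of required variables that are missing or blank.
function findMissingElasticVars(env) {
  return REQUIRED_ELASTIC_VARS.filter(
    (name) => typeof env[name] !== 'string' || env[name].trim() === ''
  );
}

// Throws a readable error instead of letting the client fail later.
function assertElasticEnv(env = process.env) {
  const missing = findMissingElasticVars(env);
  if (missing.length > 0) {
    throw new Error(`Missing Elasticsearch settings: ${missing.join(', ')}`);
  }
}
```

Calling `assertElasticEnv()` before `new Client(...)` would report every missing setting at once.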

- Let’s add our `elasticClient` to our Strapi entry point file at `src/index.js` and make the `elasticClient.initializeESClient();` call during the Strapi bootstrap. This initializes the connection to Elasticsearch when the Strapi CMS starts.

```javascript
'use strict';

const elasticClient = require('../elastic/elasticClient');

module.exports = {
  register(/*{ strapi }*/) {},

  bootstrap({ strapi }) {
    // Create the Elasticsearch client once, when Strapi starts
    elasticClient.initializeESClient();
  },
};
```

5. Indexing data from Strapi CMS into Elasticsearch

5.1 Code to invoke the Elasticsearch indexing APIs

- We now add a few functions to our `elastic/elasticClient.js` that let us pass our Strapi CMS data into Elasticsearch for indexing.

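```javascript
// Adds a new document, or overwrites an existing one with the same id
async function indexData({ itemId, title, description, content, slug }) {
  try {
    await client.index({
      index: process.env.ELASTIC_INDEX_NAME,
      id: itemId,
      document: {
        slug, title, description, content,
      },
    });
    // Make the change visible to searches right away
    await client.indices.refresh({ index: process.env.ELASTIC_INDEX_NAME });
  } catch (err) {
    console.log('Error encountered while indexing data to Elasticsearch.');
    console.log(err);
    throw err;
  }
}

// Removes a previously indexed document
async function removeIndexedData({ itemId }) {
  try {
    await client.delete({
      index: process.env.ELASTIC_INDEX_NAME,
      id: itemId,
    });
    await client.indices.refresh({ index: process.env.ELASTIC_INDEX_NAME });
  } catch (err) {
    console.log('Error encountered while removing indexed data from Elasticsearch.');
    console.log(err);
    throw err;
  }
}

// Update module.exports so these functions can be imported elsewhere
module.exports = {
  initializeESClient,
  indexData,
  removeIndexedData,
};
```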

- `indexData()` may be called to add new data or to update an existing record within our Elasticsearch index, since indexing with an existing document ID replaces that document. `removeIndexedData()` may be called to remove an already indexed item.

5.2 Strapi collection to store indexing requests

Whenever a `blog-post` item within Strapi is updated, we want to invoke the previously defined `indexData()` function. However, calling `indexData()` directly may not be ideal, since there could be a large number of indexing requests and Elasticsearch may be slow to respond.

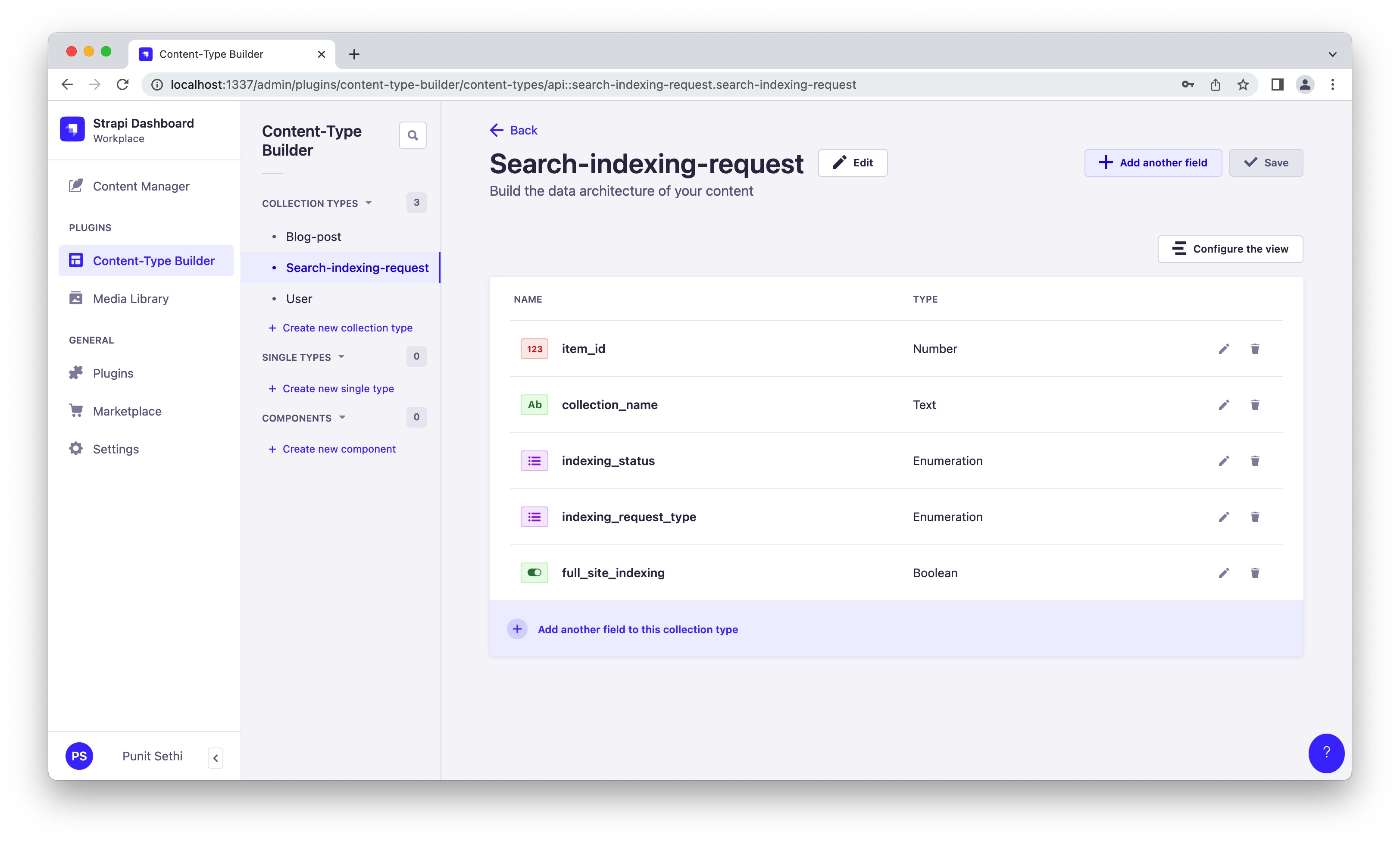

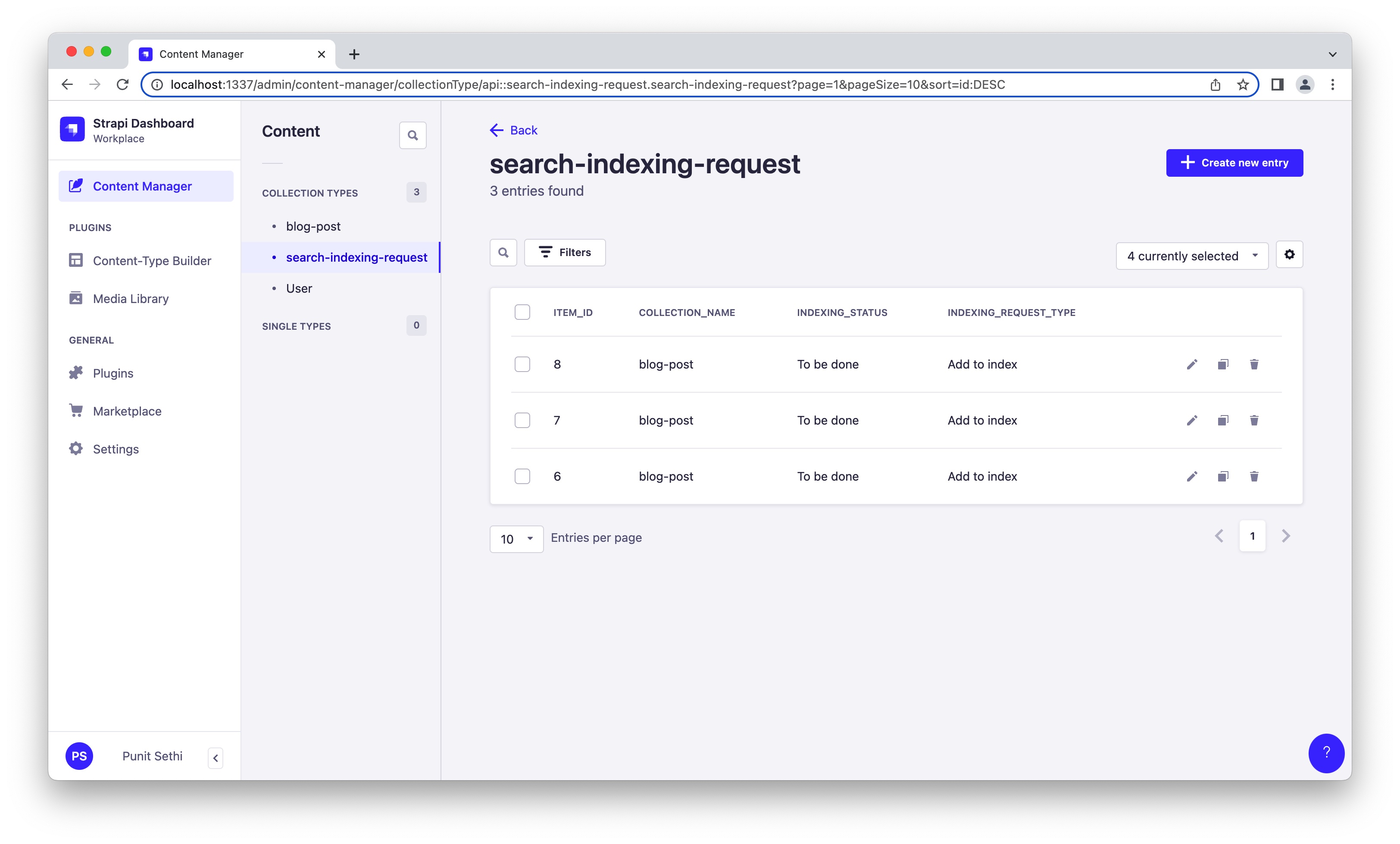

As a result, we make Elasticsearch indexing asynchronous. We do so by storing all the indexing requests within a Strapi collection called `search-indexing-requests`. We define it as follows:

5.3 Leveraging Strapi lifecycle hooks to add indexing requests

We now need to write code within Strapi’s `afterUpdate` and `afterDelete` lifecycle hooks. This code adds entries to our newly created `search-indexing-requests` collection to be picked up for indexing. The code below goes into `src/api/blog-post/content-types/blog-post/lifecycles.js`.

```javascript
module.exports = {
  async afterUpdate(event) {
    // Queue only published items for indexing
    if (event?.result?.publishedAt) {
      await strapi.entityService.create('api::search-indexing-request.search-indexing-request', {
        data: {
          item_id: event.result.id,
          collection_name: event.model.singularName,
          indexing_status: "To be done",
          indexing_request_type: "Add to index",
          full_site_indexing: false,
        },
      });
    }
  },

  async afterDelete(event) {
    // Queue the removal of the deleted item from the index
    await strapi.entityService.create('api::search-indexing-request.search-indexing-request', {
      data: {
        item_id: event.result.id,
        collection_name: event.model.singularName,
        indexing_status: "To be done",
        indexing_request_type: "Delete from index",
        full_site_indexing: false,
      },
    });
  },
};
```

With the code above in place, the `search-indexing-requests` collection gets populated as we publish data within our `blog-post` collection. Notice the values of the `indexing_status` and `indexing_request_type` fields:
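Both lifecycle hooks build nearly identical records that differ only in `indexing_request_type`. As a hedged refactoring sketch, the shared payload could come from one hypothetical helper, `buildIndexingRequest`, with the type strings kept as constants so the hooks and the cron job cannot drift apart:

```javascript
// The two request types written by the lifecycle hooks
const REQUEST_TYPES = {
  ADD: 'Add to index',
  DELETE: 'Delete from index',
};

// Hypothetical helper: builds the `data` payload for a search-indexing-request entry.
function buildIndexingRequest(event, requestType) {
  if (!Object.values(REQUEST_TYPES).includes(requestType)) {
    throw new Error(`Unknown indexing request type: ${requestType}`);
  }
  return {
    item_id: event.result.id,
    collection_name: event.model.singularName,
    indexing_status: 'To be done',
    indexing_request_type: requestType,
    full_site_indexing: false,
  };
}

// Only published items are queued for (re)indexing after an update.
function shouldQueueAfterUpdate(event) {
  return Boolean(event?.result?.publishedAt);
}
```

Inside `afterUpdate`, the call would then become `strapi.entityService.create('api::search-indexing-request.search-indexing-request', { data: buildIndexingRequest(event, REQUEST_TYPES.ADD) })`.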

5.4 Setting up the cron job to process search indexing requests

Next up, we need a cron job that runs periodically to process all the `To be done` rows from our `search-indexing-requests` table.

- We create a new task within our `config/cron-tasks.js` as follows:

```javascript
const { performIndexingForSearch } = require('../elastic/cron-search-indexing');

module.exports = {
  performIndexingForSearch: {
    task: async ({ strapi }) => {
      return await performIndexingForSearch({ strapi });
    },
    options: {
      rule: "00 23 * * *", // run daily at 11:00 PM
    },
  },
};
```

- We add the newly created cron task to our `config/server.js`:

```javascript
const cronTasks = require('./cron-tasks');

module.exports = ({ env }) => ({
  // ...
  // ...
  cron: {
    enabled: true,
    tasks: cronTasks,
  },
});
```

- Let’s look at the code within `performIndexingForSearch()` (in `elastic/cron-search-indexing.js`) that processes the records from `search-indexing-requests`:

```javascript
const { indexData, removeIndexedData } = require('./elasticClient');

const REQUESTS_API = 'api::search-indexing-request.search-indexing-request';

// Marks a processed indexing request as done
async function markAsDone(strapi, requestId) {
  await strapi.entityService.update(REQUESTS_API, requestId, {
    data: { indexing_status: 'Done' },
  });
}

module.exports = {
  performIndexingForSearch: async ({ strapi }) => {
    // Fetch all pending indexing requests
    const recs = await strapi.entityService.findMany(REQUESTS_API, {
      filters: { indexing_status: "To be done" },
    });

    for (const rec of recs) {
      const col = rec.collection_name;
      if (rec.item_id) {
        const indexItemId = col + '::' + rec.item_id;
        if (rec.indexing_request_type !== "Delete from index") {
          // Read the latest version of the item and (re)index it
          const api = 'api::' + col + '.' + col;
          const item = await strapi.entityService.findOne(api, rec.item_id);
          if (item) {
            const { title, description, content, slug } = item;
            await indexData({ itemId: indexItemId, title, description, content, slug });
          }
        } else {
          await removeIndexedData({ itemId: indexItemId });
        }
        await markAsDone(strapi, rec.id);
      } else {
        // TBD : Code to index the entire collection.
      }
    }
  },
};
```

- The `performIndexingForSearch()` function, which is set to run once every 24 hours, does three things:

  - It reads all the `To be done` records from `search-indexing-requests`.

  - It processes the read records sequentially, invoking `elasticClient.indexData()` or `elasticClient.removeIndexedData()` based on the `indexing_request_type` value.

  - It then updates the `indexing_status` of each processed record to `Done`.
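The per-record decision in that loop can be isolated into a pure function, which makes it easy to unit test without a running Strapi or Elasticsearch instance. A minimal sketch; `planIndexingAction` and its return shape are hypothetical, not part of the original code:

```javascript
// Hypothetical helper: decides what the cron job should do with one request record.
// Returns { action: 'index' | 'remove' | 'reindex-collection', ... }.
function planIndexingAction(rec) {
  const col = rec.collection_name;
  if (!rec.item_id) {
    // No item id: the whole collection needs (re)indexing (still TBD in this post)
    return { action: 'reindex-collection', collection: col };
  }
  const indexItemId = `${col}::${rec.item_id}`;
  if (rec.indexing_request_type === 'Delete from index') {
    return { action: 'remove', indexItemId };
  }
  return {
    action: 'index',
    indexItemId,
    // Strapi API uid used to load the latest version of the item
    api: `api::${col}.${col}`,
  };
}
```

The cron loop would then only switch on `action` and call `indexData()`, `removeIndexedData()` or a future collection re-index routine.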

6. Serving search requests

6.1 Code to invoke the Elasticsearch search API

With our search indexing in place, it is time to write code that invokes Elasticsearch’s search API to fetch the matching results. We add this to our `elastic/elasticClient.js`:

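```javascript
async function searchData(searchTerm) {
  try {
    const result = await client.search({
      index: process.env.ELASTIC_INDEX_NAME,
      size: 100, // return at most 100 matches
      query: {
        bool: {
          // With no `must` clause, a document matches if any of these clauses match
          should: [
            { match: { content: searchTerm } },
            { match: { title: searchTerm } },
            { match: { description: searchTerm } },
          ],
        },
      },
    });
    return result;
  } catch (err) {
    console.log('Search : elasticClient.searchData : Error encountered while making a search request to Elasticsearch.');
    throw err;
  }
}

// Update module.exports to also include searchData for the search controller
module.exports = {
  initializeESClient,
  indexData,
  removeIndexedData,
  searchData,
};
```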

- With the above code, we search for the provided term within the indexed `title`, `description` and `content` fields. Results with matches in any of these fields are returned.
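Because the three `should` clauses differ only in the field name, the query body can also be generated from a list of fields, which pairs well with making the indexed fields configurable. A minimal sketch; `buildSearchQuery` is a hypothetical helper that produces the same query object used in `searchData()`:

```javascript
// Hypothetical helper: builds the bool/should query used in searchData()
// from a list of fields (defaults match the fields searched above).
function buildSearchQuery(searchTerm, fields = ['content', 'title', 'description']) {
  return {
    bool: {
      should: fields.map((field) => ({ match: { [field]: searchTerm } })),
    },
  };
}
```

Inside `searchData()`, this would be used as `client.search({ index: process.env.ELASTIC_INDEX_NAME, size: 100, query: buildSearchQuery(searchTerm) })`.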

6.2 Creating a Strapi route to front the Elasticsearch search:

Next up, we create a Strapi CMS route that invokes the above-mentioned `elasticClient.searchData` and returns the matches to the client.

- To do so, we create a new API via `npx strapi generate api` with the name `search`. This generates a set of files within the `src/api/search` folder.

- Within the `src/api/search/routes/search.js` file, we create a custom route that will serve our search requests:

```javascript
module.exports = {
  routes: [
    {
      method: 'GET',
      path: '/search',
      handler: 'search.performSearch',
      config: {
        policies: [],
        middlewares: [],
      },
    },
  ],
};
```

- The `performSearch` handler needs to be defined within `src/api/search/controllers/search.js` as follows:

```javascript
'use strict';

const { searchData } = require('../../../../elastic/elasticClient');

module.exports = {
  performSearch: async (ctx, next) => {
    try {
      if (ctx.query.search) {
        const resp = await searchData(ctx.query.search);
        if (resp?.hits?.hits) {
          // Return only the fields the client needs from each match
          const specificFields = resp.hits.hits.map((data) => {
            const dt = data['_source'];
            return { title: dt.title, slug: dt.slug, description: dt.description };
          });
          ctx.body = specificFields;
        } else {
          ctx.body = {};
        }
      } else {
        ctx.body = {};
      }
    } catch (err) {
      ctx.response.status = 500;
      ctx.body = "An error was encountered while processing the search request.";
      console.log('An error was encountered while processing the search request.');
      console.log(err);
    }
  },
};
```

- `performSearch` simply fetches the search results from Elasticsearch (via `elasticClient.searchData`) and returns the `title`, `slug` and `description` of each match to the client.
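The `hits.hits[]._source` mapping in the controller can be pulled into a small pure function that is easier to unit test and also tolerates responses without hits. A sketch with a hypothetical `toSearchResults` helper. Returning an empty array (rather than `{}`) when there are no matches is a design choice that keeps the response type consistent for clients:

```javascript
// Hypothetical helper: extracts the client-facing fields from an Elasticsearch
// search response (the `hits.hits[]._source` objects).
function toSearchResults(resp, fields = ['title', 'slug', 'description']) {
  const hits = resp?.hits?.hits ?? [];
  return hits.map((hit) => {
    const source = hit._source ?? {};
    const result = {};
    for (const field of fields) {
      result[field] = source[field];
    }
    return result;
  });
}
```

In the controller, `ctx.body = toSearchResults(await searchData(ctx.query.search));` would then cover both the match and no-match cases.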

6.3 Setting up permissions for the search API:

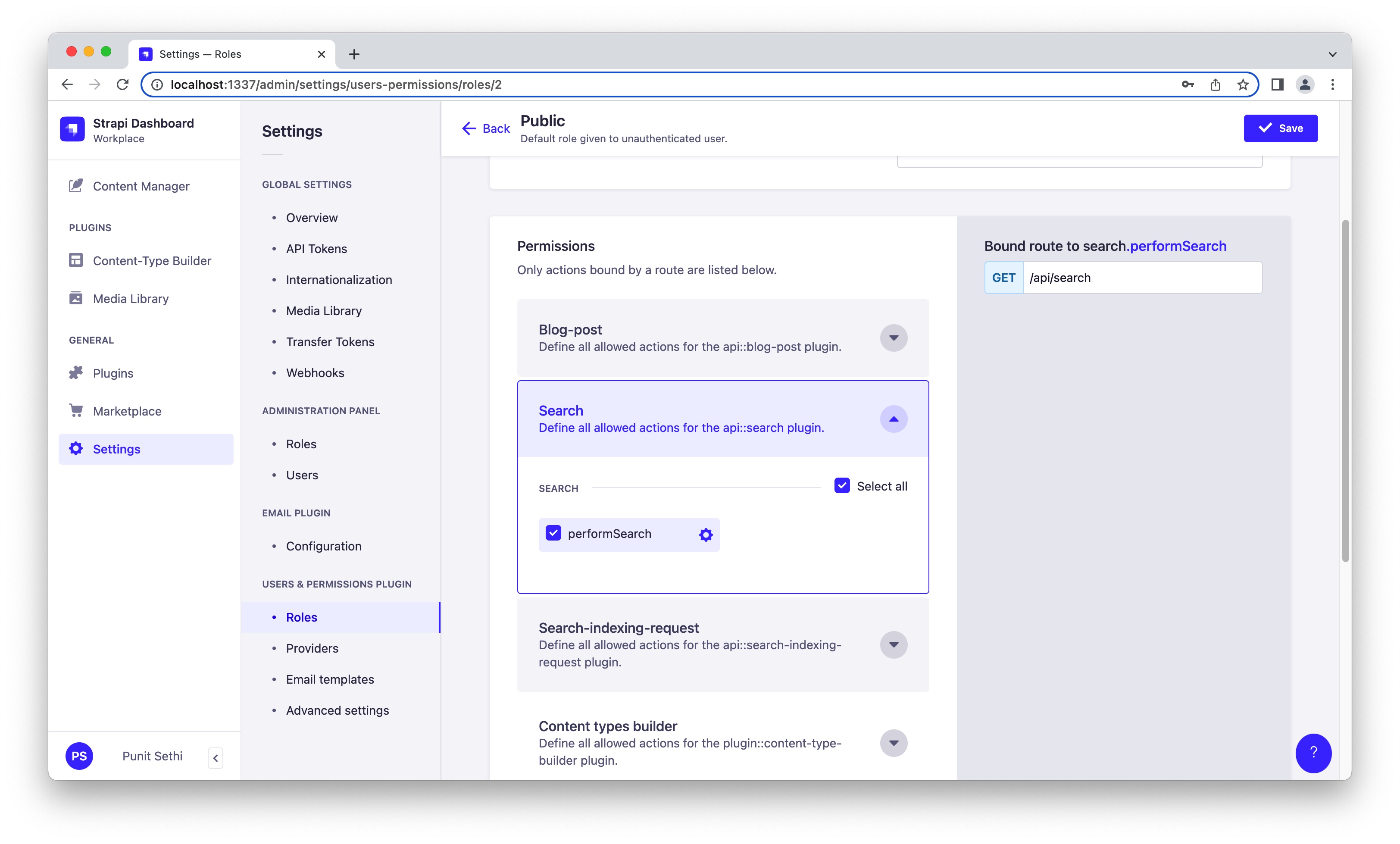

For our `blog-post` example, we are building a publicly accessible search, so I made the newly defined `/search` route accessible to the `Public` role.

7. Verifying our search integration:

At this point, we can check if the data we enter within our blog-post collection is searchable. To do so, we need to create a few entries within our CMS.

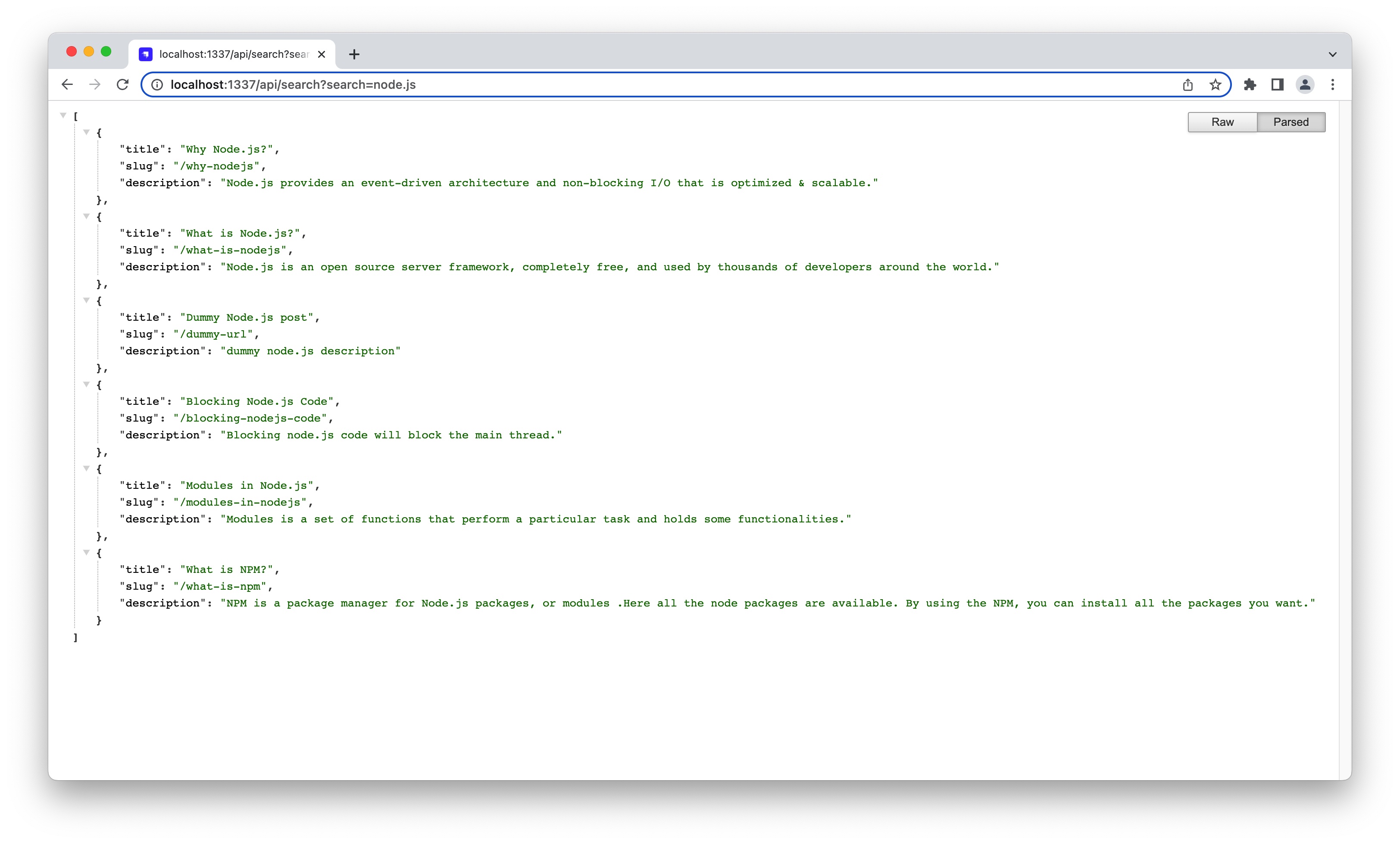

We then search for various terms via our search API like http://localhost:1337/api/search?search=node.js and check the results:
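When trying terms that contain spaces or characters such as `+` or `&`, the `search` query parameter must be URL-encoded. A small sketch using the standard `URL` and `URLSearchParams` APIs available in Node.js; `buildSearchUrl` is a hypothetical helper, and the default base URL assumes Strapi's local address used above:

```javascript
// Hypothetical helper: builds the search API URL for a term, URL-encoding it.
function buildSearchUrl(term, baseUrl = 'http://localhost:1337') {
  const url = new URL('/api/search', baseUrl);
  // searchParams encodes the term, e.g. spaces become '+' and '&' becomes '%26'
  url.searchParams.set('search', term);
  return url.toString();
}
```

On Node.js 18+, the results can then be fetched with `await (await fetch(buildSearchUrl('node.js'))).json()`.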

8. Adding more features to our search integration:

While this post demonstrates the basic constructs of setting up Elasticsearch with Strapi, there’s a lot more that can be done to build a mature integration. Below are some example enhancements:

- Make the collections and fields to be indexed configurable, so that new collections and fields can be indexed without code changes.

- Leverage Strapi middleware instead of lifecycle hooks for marking the items to index, to improve code reusability and maintenance.

- Add the ability to re-index complete Strapi collections. Leverage Elasticsearch index aliases to rebuild and sync indexes without any downtime.

- Enable pagination, filtering, sorting, access control and field population for the `/search` API.

Each of these enhancements can be built on top of the basic constructs of the Strapi - Elasticsearch integration detailed in this post.
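Pagination is the most self-contained of these. Elasticsearch's search API accepts `from` and `size` options, so a sketch under the assumption that clients pass hypothetical `page` and `pageSize` query parameters to `/search` could look like this (`toPagination` and its defaults are illustrative, not from the original code):

```javascript
// Hypothetical helper: turns ?page=&pageSize= query parameters into the
// `from`/`size` options accepted by Elasticsearch's search API.
function toPagination(query, { defaultSize = 10, maxSize = 100 } = {}) {
  // Invalid or missing values fall back to page 1 and the default size
  const page = Math.max(1, Number.parseInt(query.page, 10) || 1);
  const requested = Number.parseInt(query.pageSize, 10) || defaultSize;
  const size = Math.min(Math.max(1, requested), maxSize);
  return { from: (page - 1) * size, size };
}
```

Inside `searchData`, the result could be spread into the `client.search()` call in place of the fixed `size: 100`. Note that by default `from + size` cannot exceed the index's `index.max_result_window` setting (10,000).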

Need help with Strapi setup, upgrade, or customization?

I've been a Strapi specialist since 2021 (v3).

My Strapi work includes:

- Published 2 plugins on the Strapi marketplace

- Written 4+ articles featured in the official Strapi newsletter

- Contributed fixes to the Strapi core repo

- Implemented, upgraded, and customized Strapi setups for multiple teams


Or email: punit@tezify.com


Hire Me
